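HUAWEI CG Kit provides a high-performance rendering framework built on the Vulkan graphics API, with systems for PBR materials, models, textures, lighting, and components. The framework is designed specifically around the features and implementation details of the Huawei DDK to deliver the best 3D rendering performance on Huawei platforms. It also supports secondary development, which greatly reduces the difficulty and complexity of app development and improves development efficiency.

As a developer, you need:

In this Codelab, you will use a ready-made demo project to call HUAWEI CG Kit APIs. With the demo project, you can experience: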

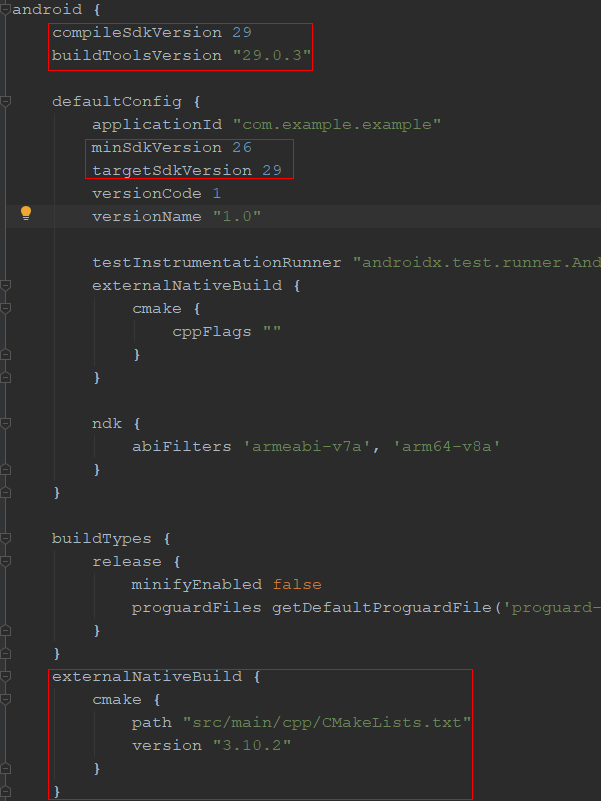

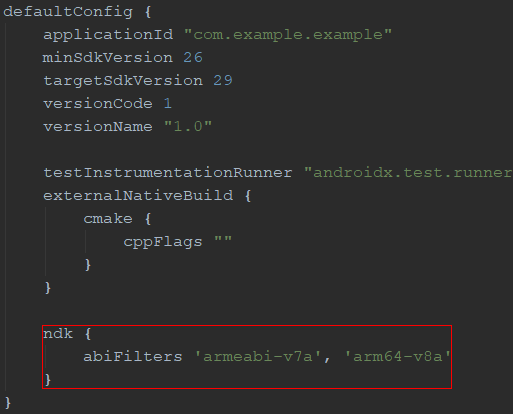

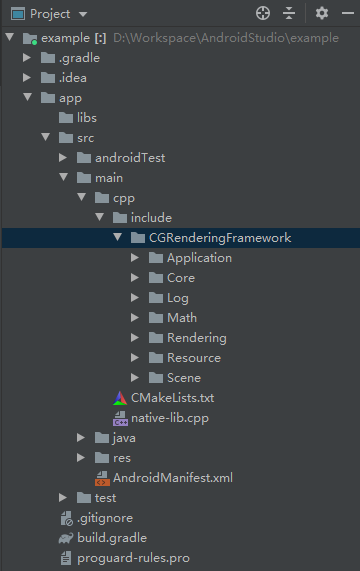

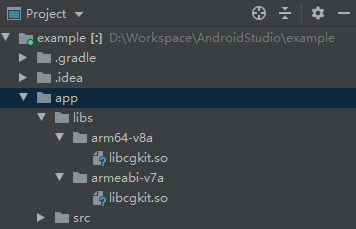

To integrate HUAWEI CG Kit capabilities, complete the following preparations:

Also configure the NDK ABI filters.

```cmake
cmake_minimum_required(VERSION 3.4.1)

# CG Kit and demo application headers
include_directories(${CMAKE_SOURCE_DIR}/include/CGRenderingFramework)
include_directories(${CMAKE_SOURCE_DIR}/include/MainApplication)

# Demo application code
add_library(main-lib
    SHARED
    source/Main.cpp
    source/MainApplication.cpp)

# Prebuilt CG Kit library, one copy per ABI
add_library(cgkit SHARED IMPORTED)
set_target_properties(cgkit
    PROPERTIES IMPORTED_LOCATION
    ${CMAKE_SOURCE_DIR}/../../../libs/${ANDROID_ABI}/libcgkit.so)

# Vulkan headers from the Vulkan SDK (requires the VULKAN_SDK environment variable)
set(VULKAN_INCLUDE_DIR "$ENV{VULKAN_SDK}/include")
# Alternative include path inside the NDK:
# set(VULKAN_INCLUDE_DIR "${ANDROID_NDK}/sources/third_party/vulkan/src/include")
include_directories(${VULKAN_INCLUDE_DIR})

# android_native_app_glue from the NDK, built as a static library
set(NATIVE_APP_GLUE_DIR "${ANDROID_NDK}/sources/android/native_app_glue")
file(GLOB NATIVE_APP_GLUE_FILES
    "${NATIVE_APP_GLUE_DIR}/*.c"
    "${NATIVE_APP_GLUE_DIR}/*.h")
add_library(native_app_glue STATIC ${NATIVE_APP_GLUE_FILES})
target_include_directories(native_app_glue PUBLIC ${NATIVE_APP_GLUE_DIR})

find_library(log-lib log)

target_link_libraries(main-lib
    cgkit
    native_app_glue
    android
    ${log-lib})

# Force the linker to keep ANativeActivity_onCreate (defined in native_app_glue),
# which is only referenced by the Android runtime
set(CMAKE_SHARED_LINKER_FLAGS "${CMAKE_SHARED_LINKER_FLAGS} -u ANativeActivity_onCreate")
```

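- `width=xxx`: pixel width of each face texture
- `height=xxx`: pixel height of each face texture
- `depth=xxx`: pixel depth of each face texture
- `mipmap=xxx`: number of mipmap levels
- `face=xxx`: number of cube faces
- `channel=xxx`: number of RGBA channels in each face texture, for example 4
- `suffix=xxx`: file format of each face texture, for example `.png`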
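The hypothetical `ParseCubemapConfig` below is a sketch of how these entries could be read. It assumes the config file holds one `key=value` pair per line, with no spaces around `=`. The Codelab does not show the exact file name or format CG Kit expects, so treat this as illustrative only.

```cpp
#include <cassert>
#include <map>
#include <sstream>
#include <string>

// Hypothetical container for the cubemap config keys listed above.
struct CubemapConfig {
    int width = 0;
    int height = 0;
    int depth = 0;
    int mipmap = 0;
    int face = 0;
    int channel = 0;
    std::string suffix;
};

// Assumed format: one "key=value" pair per line; lines without '=' are skipped.
CubemapConfig ParseCubemapConfig(const std::string& text)
{
    std::map<std::string, std::string> entries;
    std::istringstream in(text);
    std::string line;
    while (std::getline(in, line)) {
        if (!line.empty() && line.back() == '\r') {
            line.pop_back();  // tolerate Windows line endings
        }
        const std::string::size_type eq = line.find('=');
        if (eq == std::string::npos) {
            continue;  // skip blank or malformed lines
        }
        entries[line.substr(0, eq)] = line.substr(eq + 1);
    }
    // Missing numeric keys default to 0; std::stoi throws on non-numeric values.
    auto toInt = [&entries](const std::string& key) {
        const auto it = entries.find(key);
        return it == entries.end() ? 0 : std::stoi(it->second);
    };
    CubemapConfig cfg;
    cfg.width = toInt("width");
    cfg.height = toInt("height");
    cfg.depth = toInt("depth");
    cfg.mipmap = toInt("mipmap");
    cfg.face = toInt("face");
    cfg.channel = toInt("channel");
    const auto suffix = entries.find("suffix");
    if (suffix != entries.end()) {
        cfg.suffix = suffix->second;
    }
    return cfg;
}
```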

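- `cubeface_neg_xi`: left face of the cube
- `cubeface_neg_yi`: bottom face of the cube
- `cubeface_neg_zi`: front face of the cube
- `cubeface_pos_xi`: right face of the cube
- `cubeface_pos_yi`: top face of the cube
- `cubeface_pos_zi`: back face of the cube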
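This sketch is useful if you assemble the six faces yourself, for example to inspect them in a Vulkan debugging tool. Vulkan orders the array layers of a cube-compatible image as +X, -X, +Y, -Y, +Z, -Z (layers 0 to 5). The table below maps the face-name prefixes above onto that order, leaving out the trailing `i` of each file name. Whether CG Kit uploads the faces in this order internally is an assumption, and `CubeFaceLayer` is a hypothetical helper.

```cpp
#include <array>
#include <cassert>
#include <cstddef>
#include <string>

// Vulkan cube layer order (+X, -X, +Y, -Y, +Z, -Z) expressed with the Codelab's face names.
constexpr std::array<const char*, 6> kVulkanCubeFaceOrder = {
    "cubeface_pos_x",  // layer 0: right
    "cubeface_neg_x",  // layer 1: left
    "cubeface_pos_y",  // layer 2: top
    "cubeface_neg_y",  // layer 3: bottom
    "cubeface_pos_z",  // layer 4: back
    "cubeface_neg_z",  // layer 5: front
};

// Returns the Vulkan array layer for a face-name prefix, or -1 if the name is unknown.
int CubeFaceLayer(const std::string& facePrefix)
{
    for (std::size_t i = 0; i < kVulkanCubeFaceOrder.size(); ++i) {
        if (facePrefix == kVulkanCubeFaceOrder[i]) {
            return static_cast<int>(i);
        }
    }
    return -1;
}
```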

```glsl
// Vertex input attributes
layout(location = 0) in vec3 position;
layout(location = 1) in vec2 texcoord;
layout(location = 2) in vec3 normal;
layout(location = 3) in vec3 tangent;

// Global uniforms: camera matrices, screen resolution, and camera position
layout(set = 0, binding = 0) uniform GlobalUniform
{
    mat4 view;
    mat4 projection;
    mat4 projectionOrtho;
    mat4 viewProjection;
    mat4 viewProjectionInv;
    mat4 viewProjectionOrtho;
    vec4 resolution;
    vec4 cameraPosition;
};
```
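For reference, the sketch below mirrors `GlobalUniform` on the CPU side to show how large the block is. It assumes std140 layout rules. Under std140 each `mat4` takes 64 bytes and each `vec4` takes 16 bytes, so the block is 6 × 64 + 2 × 16 = 416 bytes. `Mat4`, `Vec4`, and `GlobalUniformMirror` are hypothetical names. The framework presumably fills this buffer itself, so application code would not normally write it.

```cpp
#include <cassert>
#include <cstddef>

// Hypothetical std140-compatible stand-ins for GLSL mat4 and vec4.
struct alignas(16) Mat4 { float m[16]; };  // 4x4 floats, 64 bytes
struct alignas(16) Vec4 { float v[4]; };   // 4 floats, 16 bytes

// CPU-side mirror of the GlobalUniform block, member for member.
struct GlobalUniformMirror {
    Mat4 view;
    Mat4 projection;
    Mat4 projectionOrtho;
    Mat4 viewProjection;
    Mat4 viewProjectionInv;
    Mat4 viewProjectionOrtho;
    Vec4 resolution;
    Vec4 cameraPosition;
};

static_assert(sizeof(Mat4) == 64, "mat4 occupies 64 bytes under std140");
static_assert(sizeof(GlobalUniformMirror) == 416, "6 mat4 + 2 vec4 = 416 bytes");
```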

```glsl
#define MAX_FORWARD_LIGHT_COUNT 16

#define DIRECTIONAL_LIGHT 0
#define POINT_LIGHT 1
#define SPOT_LIGHT 2

struct LightData
{
    // xyz: color, w: intensity
    vec4 color;
    // xyz: position
    vec4 position;
    // xyz: direction
    vec4 direction;
    // x: light type
    vec4 type_angle;
};

layout(set = 0, binding = 6) uniform LightsInfos
{
    LightData lights[MAX_FORWARD_LIGHT_COUNT];
    // Number of active lights
    uint count;
};
```
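The sketch below mirrors `LightData` and `LightsInfos` under the same std140 assumption. Each `LightData` is four `vec4`s (64 bytes), so `lights[16]` occupies 1024 bytes and `count` starts at byte offset 1024. The type codes follow the `#define`s above. Two details are assumptions, because only the `x` component of `type_angle` is documented: storing the type as a float in `type_angle.x`, and leaving `y`, `z`, and `w` at zero. All names here are hypothetical.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>

constexpr int kMaxForwardLightCount = 16;  // mirrors MAX_FORWARD_LIGHT_COUNT
constexpr float kDirectionalLight = 0.0f;  // mirrors DIRECTIONAL_LIGHT
constexpr float kPointLight = 1.0f;        // mirrors POINT_LIGHT
constexpr float kSpotLight = 2.0f;         // mirrors SPOT_LIGHT

struct alignas(16) Vec4 { float x, y, z, w; };

struct LightDataMirror {
    Vec4 color;       // xyz: color, w: intensity
    Vec4 position;    // xyz: position
    Vec4 direction;   // xyz: direction
    Vec4 type_angle;  // x: light type; other components not documented here
};

struct LightsInfosMirror {
    LightDataMirror lights[kMaxForwardLightCount];
    std::uint32_t count;  // number of active lights
};

static_assert(sizeof(LightDataMirror) == 64, "four vec4 members");
static_assert(offsetof(LightsInfosMirror, count) == 1024, "count follows 16 * 64 bytes");

// Hypothetical helper that fills one light entry.
LightDataMirror MakeLight(float type, Vec4 color, Vec4 position, Vec4 direction)
{
    LightDataMirror d{};
    d.color = color;
    d.position = position;
    d.direction = direction;
    d.type_angle = Vec4{type, 0.0f, 0.0f, 0.0f};
    return d;
}
```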

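- `albedoTexture`: base color map, corresponding to the Material type `TEXTURE_TYPE_ALBEDO`
- `normalTexture`: normal map, corresponding to the Material type `TEXTURE_TYPE_NORMAL`
- `pbrTexture`: PBR parameter map (x channel: ambient occlusion, y channel: roughness, z channel: metallic), corresponding to the Material type `TEXTURE_TYPE_PBRTEXTURE`
- `emissionTexture`: emissive map, corresponding to the Material type `TEXTURE_TYPE_EMISSION`
- `envTexture`: environment map, corresponding to the Material type `TEXTURE_TYPE_ENVIRONMENTMAP`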
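Your AO, roughness, and metallic maps may exist as separate grayscale images. In that case they must be combined into one `pbrTexture` with the channel layout listed above. The hypothetical `PackPbrTexture` below interleaves three 8-bit maps of equal size into RGBA8 pixels. The alpha value of 255 is an assumption, because the Codelab does not say what the alpha channel should hold.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <stdexcept>
#include <vector>

// Packs single-channel maps into RGBA8: R (x) = AO, G (y) = roughness, B (z) = metallic.
std::vector<std::uint8_t> PackPbrTexture(const std::vector<std::uint8_t>& ao,
                                         const std::vector<std::uint8_t>& roughness,
                                         const std::vector<std::uint8_t>& metallic)
{
    if (ao.size() != roughness.size() || ao.size() != metallic.size()) {
        throw std::invalid_argument("all maps must have the same pixel count");
    }
    std::vector<std::uint8_t> rgba(ao.size() * 4);
    for (std::size_t i = 0; i < ao.size(); ++i) {
        rgba[i * 4 + 0] = ao[i];
        rgba[i * 4 + 1] = roughness[i];
        rgba[i * 4 + 2] = metallic[i];
        rgba[i * 4 + 3] = 255;  // alpha unused here (assumption)
    }
    return rgba;
}
```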

```cpp
void android_main(android_app* state)
{
    // Instantiate the demo's main application
    auto app = CreateMainApplication();
    if (app == nullptr) {
        return;
    }
    // Start the platform and rendering setup
    app->Start(static_cast<void*>(state));
    // Run the main rendering loop
    app->MainLoop();
    CG_SAFE_DELETE(app);
}
```

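```cpp
void MainApplication::Start(void* param)
{
    BaseApplication::Start(param);
    // Set the log level to LOG_VERBOSE to override CG Kit's default log level.
    // Mind the call order: a custom log level must be set after CG Kit's Start,
    // otherwise Start overwrites it.
    Log::SetLogLevel(LOG_VERBOSE);
}
```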

```cpp
void MainApplication::InitScene()
{
    LOGINFO("MainApplication InitScene.");
    BaseApplication::InitScene();

    // step 1: Add camera
    LOGINFO("Enter init main camera.");
    SceneObject* cameraObj = CG_NEW SceneObject(nullptr);
    if (cameraObj == nullptr) {
        LOGERROR("Failed to create camera object.");
        return;
    }
    Camera* mainCamera = cameraObj->AddComponent<Camera>();
    if (mainCamera == nullptr) {
        CG_SAFE_DELETE(cameraObj);
        LOGERROR("Failed to create main camera.");
        return;
    }
    const f32 FOV = 60.f;
    const f32 NEAR = 0.1f;
    const f32 FAR = 500.0f;
    const Vector3 EYE_POSITION(0.0f, 0.0f, 0.0f);
    cameraObj->SetPosition(EYE_POSITION);
    mainCamera->SetProjectionType(ProjectionType::PROJECTION_TYPE_PERSPECTIVE);
    mainCamera->SetPerspective(FOV, gCGKitInterface.GetAspectRatio(), NEAR, FAR);
    mainCamera->SetViewport(0, 0, gCGKitInterface.GetScreenWidth(), gCGKitInterface.GetScreenHeight());
    gSceneManager.SetMainCamera(mainCamera);
}
```
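To see what the numbers passed to `SetPerspective` mean, the sketch below computes the scale and depth terms of a standard right-handed perspective matrix with Vulkan's [0, 1] depth range. It assumes the FOV argument is a vertical angle in degrees, which this Codelab does not state, and it ignores Y-axis sign conventions. `MakePerspective` and `NdcDepth` are hypothetical helpers, not CG Kit APIs. With a FOV of 60°, `yScale = 1 / tan(30°) ≈ 1.732`. A point at the near distance (0.1) maps to depth 0, and a point at the far distance (500) maps to depth 1.

```cpp
#include <cassert>
#include <cmath>

// Key terms of a right-handed perspective projection with clip depth in [0, 1].
struct PerspectiveTerms {
    float xScale;   // f / aspect
    float yScale;   // f = 1 / tan(fovY / 2)
    float zScale;   // far / (near - far)
    float zOffset;  // near * far / (near - far)
};

PerspectiveTerms MakePerspective(float fovDeg, float aspect, float nearZ, float farZ)
{
    const float kPi = 3.14159265358979f;
    const float f = 1.0f / std::tan(fovDeg * kPi / 360.0f);  // half the FOV, in radians
    return {f / aspect, f, farZ / (nearZ - farZ), nearZ * farZ / (nearZ - farZ)};
}

// Maps a view-space z (negative in front of the camera) to normalized device depth.
float NdcDepth(const PerspectiveTerms& p, float viewZ)
{
    const float clipZ = p.zScale * viewZ + p.zOffset;
    const float clipW = -viewZ;
    return clipZ / clipW;
}
```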

```cpp
void MainApplication::InitScene()
{
    // (Continues the InitScene() body from step 1.)
    // step 2: Load the default model
    // Replace this with the path where your own model data is stored
    String modelName = "models/Avatar/body.obj";
    Model* model = dynamic_cast<Model*>(gResourceManager.Get(modelName));

    // step 3: Create a SceneObject and add it to the SceneManager
    MeshRenderer* meshRenderer = nullptr;
    SceneObject* object = gSceneManager.CreateSceneObject();
    if (object != nullptr) {
        // step 4: Add a MeshRenderer component to the SceneObject
        meshRenderer = object->AddComponent<MeshRenderer>();
        // step 5: Bind the model's mesh to the MeshRenderer
        if (meshRenderer != nullptr && model != nullptr && model->GetMesh() != nullptr) {
            meshRenderer->SetMesh(model->GetMesh());
        } else {
            LOGERROR("Failed to add mesh renderer.");
        }
    } else {
        LOGERROR("Failed to create scene object.");
    }

    if (model != nullptr) {
        const Mesh* mesh = model->GetMesh();
        if (mesh != nullptr) {
            LOGINFO("Model submesh count %u.", mesh->GetSubMeshCount());
            LOGINFO("Model vertex count %d.", mesh->GetVertexCount());
            // step 6: Load textures
            String texAlbedo = "models/Avatar/Albedo_01.png";
            String texNormal = "models/Avatar/Normal_01.png";
            String texPbr = "models/Avatar/Pbr_01.png";
            String texEmissive = "shaders/pbr_brdf.png";
            u32 subMeshCnt = mesh->GetSubMeshCount();
            for (u32 i = 0; i < subMeshCnt; ++i) {
                SubMesh* subMesh = mesh->GetSubMesh(i);
                if (subMesh == nullptr) {
                    LOGERROR("Failed to get submesh.");
                    continue;
                }
                // step 7: Add a material for this submesh
                Material* material = dynamic_cast<Material*>(
                    gResourceManager.Get(ResourceType::RESOURCE_TYPE_MATERIAL));
                if (material == nullptr) {
                    LOGERROR("Failed to create new material.");
                    continue;  // skip this submesh but still finish the scene setup below
                }
                material->Init();
                material->SetSubMesh(subMesh);
                material->SetTexture(TextureType::TEXTURE_TYPE_ALBEDO, texAlbedo);
                material->SetSamplerParam(TextureType::TEXTURE_TYPE_ALBEDO, SAMPLER_FILTER_BILINEAR,
                    SAMPLER_FILTER_BILINEAR, SAMPLER_MIPMAP_BILINEAR, SAMPLER_ADDRESS_CLAMP);
                material->SetTexture(TextureType::TEXTURE_TYPE_NORMAL, texNormal);
                material->SetSamplerParam(TextureType::TEXTURE_TYPE_NORMAL, SAMPLER_FILTER_BILINEAR,
                    SAMPLER_FILTER_BILINEAR, SAMPLER_MIPMAP_BILINEAR, SAMPLER_ADDRESS_CLAMP);
                material->SetTexture(TextureType::TEXTURE_TYPE_PBRTEXTURE, texPbr);
                material->SetSamplerParam(TextureType::TEXTURE_TYPE_PBRTEXTURE, SAMPLER_FILTER_BILINEAR,
                    SAMPLER_FILTER_BILINEAR, SAMPLER_MIPMAP_BILINEAR, SAMPLER_ADDRESS_CLAMP);
                material->SetTexture(TextureType::TEXTURE_TYPE_EMISSION, texEmissive);
                material->SetSamplerParam(TextureType::TEXTURE_TYPE_EMISSION, SAMPLER_FILTER_BILINEAR,
                    SAMPLER_FILTER_BILINEAR, SAMPLER_MIPMAP_BILINEAR, SAMPLER_ADDRESS_CLAMP);
                material->SetTexture(TextureType::TEXTURE_TYPE_ENVIRONMENTMAP, m_envMap);
                material->SetSamplerParam(TextureType::TEXTURE_TYPE_ENVIRONMENTMAP, SAMPLER_FILTER_BILINEAR,
                    SAMPLER_FILTER_BILINEAR, SAMPLER_MIPMAP_BILINEAR, SAMPLER_ADDRESS_CLAMP);
                material->AttachShaderStage(ShaderStageType::SHADER_STAGE_TYPE_VERTEX, "shaders/pbr_vert.spv");
                material->AttachShaderStage(ShaderStageType::SHADER_STAGE_TYPE_FRAGMENT, "shaders/pbr_frag.spv");
                material->SetCullMode(CULL_MODE_NONE);
                material->SetDepthTestEnable(true);
                material->SetDepthWriteEnable(true);
                material->Create();
                // meshRenderer is still nullptr if step 3 or step 4 failed
                if (meshRenderer != nullptr) {
                    meshRenderer->SetMaterial(i, material);
                }
            }
        } else {
            LOGERROR("Failed to get mesh.");
        }
    } else {
        LOGERROR("Failed to load model.");
    }

    m_sceneObject = object;
    if (m_sceneObject != nullptr) {
        m_sceneObject->SetPosition(SCENE_OBJECT_POSITION);
        m_sceneObject->SetScale(SCENE_OBJECT_SCALE);
    }
    m_objectRotation = Math::PI;
}
```

```cpp
void MainApplication::InitScene()
{
    // (Continues the InitScene() body from the previous steps.)
    // step 8: Create the skybox
    SceneObject* skyboxObj = CreateSkybox();
    if (skyboxObj != nullptr) {
        skyboxObj->SetScale(Vector3(100.f, 100.f, 100.f));
    }
}
```

```cpp
SceneObject* MainApplication::CreateSkybox()
{
    String modelName = "models/test-cube.obj";
    Model* model = dynamic_cast<Model*>(gResourceManager.Get(modelName));
    if (model == nullptr || model->GetMesh() == nullptr) {
        LOGERROR("Failed to load skybox model.");
        return nullptr;
    }
    const Mesh* mesh = model->GetMesh();
    u32 subMeshCnt = mesh->GetSubMeshCount();

    // Add the skybox to the scene
    SceneObject* sceneObj = gSceneManager.CreateSceneObject();
    if (sceneObj == nullptr) {
        LOGERROR("Failed to create skybox object.");
        return nullptr;
    }
    MeshRenderer* meshRenderer = sceneObj->AddComponent<MeshRenderer>();
    if (meshRenderer == nullptr) {
        LOGERROR("Failed to add skybox mesh renderer.");
        return nullptr;
    }
    meshRenderer->SetMesh(model->GetMesh());
    for (u32 i = 0; i < subMeshCnt; ++i) {
        SubMesh* subMesh = mesh->GetSubMesh(i);
        if (subMesh == nullptr) {
            LOGERROR("Failed to get skybox submesh.");
            continue;
        }
        // Add a material that samples the environment map
        Material* material = dynamic_cast<Material*>(gResourceManager.Get(ResourceType::RESOURCE_TYPE_MATERIAL));
        if (material == nullptr) {
            LOGERROR("Failed to create skybox material.");
            continue;
        }
        material->Init();
        material->SetSubMesh(subMesh);
        material->SetTexture(TextureType::TEXTURE_TYPE_ENVIRONMENTMAP, m_envMap);
        material->SetSamplerParam(TextureType::TEXTURE_TYPE_ENVIRONMENTMAP, SAMPLER_FILTER_BILINEAR,
            SAMPLER_FILTER_BILINEAR, SAMPLER_MIPMAP_BILINEAR, SAMPLER_ADDRESS_CLAMP);
        material->AttachShaderStage(ShaderStageType::SHADER_STAGE_TYPE_VERTEX, "shaders/sky_vert.spv");
        material->AttachShaderStage(ShaderStageType::SHADER_STAGE_TYPE_FRAGMENT, "shaders/sky_frag.spv");
        material->SetCullMode(CULL_MODE_NONE);
        material->SetDepthTestEnable(true);
        material->SetDepthWriteEnable(true);
        material->Create();
        meshRenderer->SetMaterial(i, material);
    }
    sceneObj->SetScale(Vector3(1.f, 1.f, 1.f));
    sceneObj->SetPosition(Vector3(0.0f, 0.0f, 0.0f));
    sceneObj->SetRotation(Vector3(0.0f, 0.0f, 0.0f));
    return sceneObj;
}
```
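Step 8 scales the skybox cube by 100, while step 1 places the camera at the origin with a far plane of 500. The sketch assumes `models/test-cube.obj` spans [-1, 1] on each axis, which the Codelab does not state. Under that assumption, the farthest corner of the scaled cube is 100 × √3 ≈ 173.2 units from the camera, so the whole skybox stays inside the far plane. The hypothetical helpers below make that check explicit.

```cpp
#include <cassert>
#include <cmath>

// Distance from the origin to the farthest corner of an origin-centred cube
// with the given half-extent after uniform scaling.
float FarthestCornerDistance(float halfExtent, float scale)
{
    const float h = halfExtent * scale;
    return std::sqrt(3.0f * h * h);  // length of the vector (h, h, h)
}

// Sufficient condition for the scaled cube to lie entirely inside the far plane:
// no point of the cube is farther from the camera than its farthest corner.
bool FitsInsideFarPlane(float halfExtent, float scale, float farZ)
{
    return FarthestCornerDistance(halfExtent, scale) < farZ;
}
```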

```cpp
void MainApplication::InitScene()
{
    // (Continues the InitScene() body from the previous steps.)
    // step 9: Add lights
    LOGINFO("Enter init light.");
    // Directional light
    SceneObject* lightObject = CG_NEW SceneObject(nullptr);
    if (lightObject != nullptr) {
        Light* lightCom = lightObject->AddComponent<Light>();
        if (lightCom != nullptr) {
            lightCom->SetColor(Vector3::ONE);
            const Vector3 DIRECTION_LIGHT_DIR(0.1f, 0.2f, 1.0f);
            lightCom->SetDirection(DIRECTION_LIGHT_DIR);
            lightCom->SetLightType(LIGHT_TYPE_DIRECTIONAL);
            LOGINFO("Left init light.");
        } else {
            LOGERROR("Failed to add component light.");
        }
    } else {
        LOG_ALLOC_ERROR("New light object failed.");
    }

    // Point light; Update() moves it along an orbit every frame
    SceneObject* pointLightObject = CG_NEW SceneObject(nullptr);
    if (pointLightObject != nullptr) {
        m_pointLightObject = pointLightObject;
        Light* lightCom = pointLightObject->AddComponent<Light>();
        if (lightCom != nullptr) {
            const Vector3 POINT_LIGHT_COLOR(0.0f, 10000.0f, 10000.0f);
            lightCom->SetColor(POINT_LIGHT_COLOR);
            lightCom->SetLightType(LIGHT_TYPE_POINT);
        } else {
            LOGERROR("Failed to add component light.");
        }
    } else {
        LOG_ALLOC_ERROR("New light object failed.");
    }
}
```

```cpp
void MainApplication::ProcessInputEvent(const InputEvent* inputEvent)
{
    BaseApplication::ProcessInputEvent(inputEvent);
    LOGINFO("MainApplication ProcessInputEvent.");
    EventSource source = inputEvent->GetSource();
    if (source == EVENT_SOURCE_TOUCHSCREEN) {
        const TouchInputEvent* touchEvent = reinterpret_cast<const TouchInputEvent*>(inputEvent);
        if (touchEvent->GetAction() == TOUCH_ACTION_DOWN) {
            // Touch down: set the touch-begin flag so that subsequent move events
            // update rotation and scale
            LOGINFO("Action move start.");
            m_touchBegin = true;
        } else if (touchEvent->GetAction() == TOUCH_ACTION_MOVE) {
            // Touch move: derive the model's rotation and scale from the finger movement
            float touchPosDeltaX = touchEvent->GetPosX(touchEvent->GetTouchIndex()) - m_touchPosX;
            float touchPosDeltaY = touchEvent->GetPosY(touchEvent->GetTouchIndex()) - m_touchPosY;
            if (m_touchBegin) {
                // Horizontal drag: rotate the model
                if (fabs(touchPosDeltaX) > 2.f) {
                    if (touchPosDeltaX > 0.f) {
                        m_objectRotation -= 2.f * m_deltaTime;
                    } else {
                        m_objectRotation += 2.f * m_deltaTime;
                    }
                    LOGINFO("Set rotation start.");
                }
                // Vertical drag: scale the model, clamped to [0.75, 1.25]
                if (fabs(touchPosDeltaY) > 3.f) {
                    if (touchPosDeltaY > 0.f) {
                        m_objectScale -= 0.25f * m_deltaTime;
                    } else {
                        m_objectScale += 0.25f * m_deltaTime;
                    }
                    m_objectScale = std::min(1.25f, std::max(0.75f, m_objectScale));
                    LOGINFO("Set scale start.");
                }
            }
        } else if (touchEvent->GetAction() == TOUCH_ACTION_UP) {
            // Touch up: the touch gesture has ended, so clear the flag
            LOGINFO("Action up.");
            m_touchBegin = false;
        } else if (touchEvent->GetAction() == TOUCH_ACTION_CANCEL) {
            // Touch cancelled: end the gesture as well
            LOGINFO("Action cancel.");
            m_touchBegin = false;
        }
        // Remember the current position to compute the next move delta
        m_touchPosX = touchEvent->GetPosX(touchEvent->GetTouchIndex());
        m_touchPosY = touchEvent->GetPosY(touchEvent->GetTouchIndex());
    }
}
```
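The gesture rules in `ProcessInputEvent` can be restated without any engine types, which makes the thresholds and rates easy to test. In this hypothetical sketch, `GestureState` and `ApplyDrag` stand in for the `m_objectRotation` and `m_objectScale` members. The initial rotation of π matches the end of step 7. The initial scale of 1.0 and the assumption that screen Y grows downward, as on Android, do not come from CG Kit.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>

// Hypothetical stand-in for the demo's rotation and scale members.
struct GestureState {
    float rotation = 3.14159265f;  // radians, like Math::PI in step 7
    float scale = 1.0f;            // assumed initial scale
};

// Applies one move event: deltas are in pixels, deltaTime is the last frame time.
void ApplyDrag(GestureState& s, float deltaX, float deltaY, float deltaTime)
{
    if (std::fabs(deltaX) > 2.0f) {
        // Dragging right decreases the angle, dragging left increases it (2 units per second)
        s.rotation += (deltaX > 0.0f ? -2.0f : 2.0f) * deltaTime;
    }
    if (std::fabs(deltaY) > 3.0f) {
        // Dragging down shrinks the model, dragging up enlarges it (0.25 per second)
        s.scale += (deltaY > 0.0f ? -0.25f : 0.25f) * deltaTime;
        s.scale = std::min(1.25f, std::max(0.75f, s.scale));
    }
}
```

Small jitters (2 px or less horizontally, 3 px or less vertically) are ignored, and the clamp keeps the model between 75% and 125% of its base scale.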

```cpp
void MainApplication::Update(float deltaTime)
{
    LOGINFO("Update %f.", deltaTime);
    m_deltaTime = deltaTime;
    m_deltaAccumulate += m_deltaTime;
    if (m_sceneObject != nullptr) {
        // Apply the model rotation (around the Y axis)
        m_sceneObject->SetRotation(Vector3(0.0f, m_objectRotation, 0.0f));
        // Apply the model scale
        m_sceneObject->SetScale(SCENE_OBJECT_SCALE * m_objectScale);
    }

    // Move the point light along its orbit
    const float POINT_HZ_X = 0.2f;
    const float POINT_HZ_Y = 0.5f;
    const float POINT_LIGHT_CIRCLE = 50.f;
    if (m_pointLightObject != nullptr) {
        m_pointLightObject->SetPosition(Vector3(
            sin(m_deltaAccumulate * POINT_HZ_X) * POINT_LIGHT_CIRCLE,
            sin(m_deltaAccumulate * POINT_HZ_Y) * POINT_LIGHT_CIRCLE + POINT_LIGHT_CIRCLE,
            cos(m_deltaAccumulate * POINT_HZ_X) * POINT_LIGHT_CIRCLE));
    }
    BaseApplication::Update(deltaTime);
}
```
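`Update()` moves the point light along a closed path. `x` and `z` stay on a circle of radius `POINT_LIGHT_CIRCLE` (50) around the vertical axis, while `y` oscillates between 0 and 100. Because `sin` and `cos` take radians, the `POINT_HZ_*` constants act as angular rates rather than frequencies in hertz. Assuming `deltaTime` is in seconds, one horizontal loop takes 2π / 0.2 ≈ 31.4 s. The standalone sketch below reproduces the formula with a hypothetical `Vec3` type.

```cpp
#include <cassert>
#include <cmath>

struct Vec3 { float x, y, z; };

// Same formula as Update(): t is the accumulated time (m_deltaAccumulate).
Vec3 PointLightPosition(float t)
{
    const float POINT_HZ_X = 0.2f;          // angular rate of the horizontal circle
    const float POINT_HZ_Y = 0.5f;          // angular rate of the vertical bobbing
    const float POINT_LIGHT_CIRCLE = 50.f;  // orbit radius and vertical amplitude
    return {std::sin(t * POINT_HZ_X) * POINT_LIGHT_CIRCLE,
            std::sin(t * POINT_HZ_Y) * POINT_LIGHT_CIRCLE + POINT_LIGHT_CIRCLE,
            std::cos(t * POINT_HZ_X) * POINT_LIGHT_CIRCLE};
}
```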

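Well done! You have successfully completed this Codelab and learned:

You can read the following links to learn more.

Related documents

The source code of the demo used in this Codelab can be downloaded from: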