1. Introduction

Overview

AV Pipeline Kit provides a video super-resolution plugin. The plugin converts low-resolution videos to high-resolution ones during playback to improve video definition, helping your app deliver a better user experience.

What You Will Create

In this codelab, you will use a demo project to call the video super-resolution plugin and see how it converts low-resolution videos to high-resolution ones during playback.

What You Will Learn

In this codelab, you will learn how to:

- Create an app in AppGallery Connect.

- Call the video super-resolution plugin.

2. What You Will Need

Hardware Requirements

- A computer (desktop or laptop)

- A Huawei phone (with a USB cable) for debugging, running EMUI 10.1 or later, or HarmonyOS 2.0

- Hardware platform: Kirin 9000, Kirin 9000E, Kirin 990, Kirin 990E, or Kirin 980

Software Requirements

- Android Studio 4.0 or later

- SDK Platform 28 or later

- JDK 1.8 or later

Required Knowledge

Android app development basics

3. Integration Preparations

To integrate AV Pipeline Kit, you must complete the following preparations:

- Register as a developer.

- Create an app.

- Generate a signing certificate fingerprint.

- Configure the signing certificate fingerprint.
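The fingerprint that AppGallery Connect asks for is the SHA-256 digest of your app's signing certificate, which is usually read from the output of the JDK's `keytool -list -v -keystore <keystore-file>`. As a minimal sketch of what that value is, the Java class below computes the same colon-separated, uppercase hex string from a keystore. The class name `CertFingerprint` and its methods are hypothetical, and computing the fingerprint in code rather than with keytool is purely illustrative.

```java
import java.io.FileInputStream;
import java.io.InputStream;
import java.security.KeyStore;
import java.security.MessageDigest;
import java.security.NoSuchAlgorithmException;
import java.security.cert.Certificate;
import java.security.cert.CertificateEncodingException;

/** Hypothetical helper: computes a certificate fingerprint in keytool's display format. */
final class CertFingerprint {
    private CertFingerprint() {
    }

    /** Formats bytes as uppercase hex pairs separated by colons, for example "0A:FF:00". */
    static String toColonHex(byte[] digest) {
        StringBuilder sb = new StringBuilder(digest.length * 3);
        for (int i = 0; i < digest.length; i++) {
            if (i > 0) {
                sb.append(':');
            }
            sb.append(String.format("%02X", digest[i] & 0xFF));
        }
        return sb.toString();
    }

    /** SHA-256 digest of the certificate's DER encoding, formatted with toColonHex(). */
    static String sha256Fingerprint(Certificate cert)
            throws CertificateEncodingException, NoSuchAlgorithmException {
        byte[] der = cert.getEncoded();
        return toColonHex(MessageDigest.getInstance("SHA-256").digest(der));
    }

    /** Loads a keystore ("JKS" or "PKCS12") and fingerprints the certificate stored under alias. */
    static String fromKeystore(String path, String storeType, char[] storePassword, String alias)
            throws Exception {
        KeyStore ks = KeyStore.getInstance(storeType);
        try (InputStream in = new FileInputStream(path)) {
            ks.load(in, storePassword);
        }
        Certificate cert = ks.getCertificate(alias);
        if (cert == null) {
            throw new IllegalArgumentException("No certificate for alias " + alias);
        }
        return sha256Fingerprint(cert);
    }
}
```

A SHA-256 fingerprint is 32 bytes, so the formatted string is 95 characters long (64 hex digits plus 31 colons).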

4. Integrating the HMS Core SDK

Before development, integrate the AV Pipeline SDK into your development environment through the Maven repository.

- Configure the Maven repository address for the HMS Core SDK.

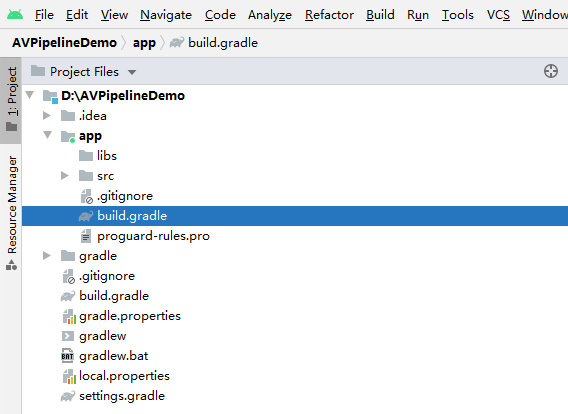

- Open the build.gradle file in the app directory of your project.

- Configure a build dependency on the video super-resolution capability in the dependencies block.

implementation 'com.huawei.hms:avpipelinesdk:{version}'
implementation 'com.huawei.hms:avpipeline-aidl:{version}'
implementation 'com.huawei.hms:avpipeline-fallback-base:{version}'
implementation 'com.huawei.hms:avpipeline-fallback-cvfoundry:{version}'

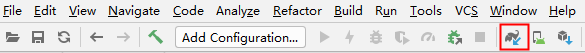

After completing the preceding configuration, click the synchronization icon on the toolbar to synchronize the Gradle files.

5. Development Procedure

Initializing the Video Player

- Write the message processing functions.

a) Define message codes.

private static final int MSG_INIT_FWK = 1;
private static final int MSG_CREATE = 2;
private static final int MSG_PREPARE_DONE = 3;
private static final int MSG_RELEASE = 4;
private static final int MSG_START_DONE = 5;
private static final int MSG_STOP_DONE = 6;
private static final int MSG_SET_DURATION = 7;
private static final int MSG_GET_CURRENT_POS = 8;
private static final int MSG_UPDATE_PROGRESS_POS = 9;

- Implement the onCreate method of Activity.

@Override
protected void onCreate(Bundle savedInstanceState) {
    super.onCreate(savedInstanceState);
    setContentView(R.layout.activity_player);
    // All player operations run on this background thread, in the order their messages are posted.
    mPlayerThread = new HandlerThread(TAG);
    mPlayerThread.start();
    if (mPlayerThread.getLooper() != null) {
        mPlayerHandler = new Handler(mPlayerThread.getLooper()) {
            @Override
            public void handleMessage(Message msg) {
                switch (msg.what) {
                    case MSG_GET_CURRENT_POS: {
                        getCurrentPos();
                        break;
                    }
                    case MSG_INIT_FWK: {
                        initFwk();
                        break;
                    }
                    case MSG_CREATE: {
                        mCountDownLatch = new CountDownLatch(1);
                        startPlayMedia();
                        break;
                    }
                    case MSG_START_DONE: {
                        onStartDone();
                        break;
                    }
                    case MSG_PREPARE_DONE: {
                        onPrepareDone();
                        break;
                    }
                    case MSG_RELEASE: {
                        stopPlayMedia();
                        // Unblocks surfaceDestroyed(), which waits for the release to finish.
                        mCountDownLatch.countDown();
                        break;
                    }
                }
                super.handleMessage(msg);
            }
        };
        initAllView();
        initSeekBar();
        mPlayerHandler.sendEmptyMessage(MSG_INIT_FWK);
    }
}

- Initialize the player layout and add callback functions for each widget.

protected void initAllView() {
    // Define a surface widget.
    mSurfaceVideo = findViewById(R.id.surfaceViewup);
    mVideoHolder = mSurfaceVideo.getHolder();
    // Register a callback that handles surface lifecycle events.
    mVideoHolder.addCallback(new SurfaceHolder.Callback() {
        // Called when the surface is created.
        @Override
        public void surfaceCreated(SurfaceHolder holder) {
            if (holder != mVideoHolder) {
                Log.i(TAG, "holder unmatch, create");
                return;
            }
            Log.i(TAG, "holder match, create");
            mPlayerHandler.sendEmptyMessage(MSG_CREATE);
        }

        // Called when the surface format or size changes.
        @Override
        public void surfaceChanged(SurfaceHolder holder, int format, int width, int height) {
        }

        // Called when the surface is destroyed. Blocks until the player thread has released MediaPlayer.
        @Override
        public void surfaceDestroyed(SurfaceHolder holder) {
            if (holder != mVideoHolder) {
                Log.i(TAG, "holder unmatch, destroy");
                return;
            }
            Log.i(TAG, "holder match, destroy ... ");
            mPlayerHandler.sendEmptyMessage(MSG_RELEASE);
            try {
                mCountDownLatch.await();
            } catch (InterruptedException e) {
                e.printStackTrace();
            }
            Log.i(TAG, "holder match, destroy done ");
        }
    });
    // Define the start/stop button.
    ImageButton btn = findViewById(R.id.startStopButton);
    // Add a tap listener.
    btn.setOnClickListener(new View.OnClickListener() {
        @Override
        public void onClick(View v) {
            // Ignore taps until a MediaPlayer instance exists.
            if (mPlayer == null) {
                return;
            }
            // Toggle between playing and paused.
            if (mIsPlaying) {
                mIsPlaying = false;
                mPlayer.pause();
                btn.setBackgroundResource(R.drawable.pause);
                mPlayer.setVolume(0.6f, 0.6f);
            } else {
                mIsPlaying = true;
                mPlayer.start();
                btn.setBackgroundResource(R.drawable.play);
            }
        }
    });
    // Define the mute button.
    ImageButton mutBtn = findViewById(R.id.muteButton);
    mutBtn.setOnClickListener(new View.OnClickListener() {
        @Override
        public void onClick(View v) {
            if (mPlayer == null) {
                return;
            }
            MediaPlayer.VolumeInfo volumeInfo = mPlayer.getVolume();
            boolean isMute = mPlayer.getMute();
            if (isMute) {
                mutBtn.setBackgroundResource(R.drawable.volume);
                mPlayer.setVolume(volumeInfo.left, volumeInfo.right);
                isMute = false;
                mPlayer.setMute(isMute);
            } else {
                mutBtn.setBackgroundResource(R.drawable.mute);
                isMute = true;
                mPlayer.setMute(isMute);
            }
        }
    });
    // Define the button for video selection.
    Button selectBtn = findViewById(R.id.selectFileBtn);
    selectBtn.setOnClickListener(new View.OnClickListener() {
        @Override
        public void onClick(View v) {
            Log.i(TAG, "user is choosing file");
            // Create an intent that opens the system file picker.
            Intent intent = new Intent(Intent.ACTION_OPEN_DOCUMENT);
            intent.setType("*/*");
            intent.addCategory(Intent.CATEGORY_DEFAULT);
            intent.addFlags(Intent.FLAG_GRANT_READ_URI_PERMISSION);
            try {
                startActivityForResult(Intent.createChooser(intent, "Please select a file."), 1);
            } catch (ActivityNotFoundException e) {
                e.printStackTrace();
                Toast.makeText(PlayerActivity.this, "Please install the file manager.", Toast.LENGTH_SHORT).show();
            }
        }
    });
}

private void initSeekBar() {
    // Define the playback seek bar (0 to 100 percent) and add a listener for it.
    mSeekBar = findViewById(R.id.seekBar);
    mSeekBar.setOnSeekBarChangeListener(new SeekBar.OnSeekBarChangeListener() {
        @Override
        public void onProgressChanged(SeekBar seekBar, int i, boolean b) {
        }

        @Override
        public void onStartTrackingTouch(SeekBar seekBar) {
        }

        @Override
        public void onStopTrackingTouch(SeekBar seekBar) {
            // Convert the percentage into a position in milliseconds and seek to it.
            Log.d(TAG, "bar progress=" + seekBar.getProgress());
            if (mDuration > 0 && mPlayer != null) {
                long seekToMsec = (long) (seekBar.getProgress() / 100.0 * mDuration);
                Log.d(TAG, "seekToMsec=" + seekToMsec);
                mPlayer.seek(seekToMsec);
            }
        }
    });
    // Text views for the current playback position and the total video duration.
    mTextCurMsec = findViewById(R.id.textViewNow);
    mTextTotalMsec = findViewById(R.id.textViewTotal);
    // Define the volume seek bar, initialized to the current music stream volume.
    mVolumeSeekBar = findViewById(R.id.volumeSeekBar);
    mAudio = (AudioManager) getSystemService(Context.AUDIO_SERVICE);
    int currentVolume = mAudio.getStreamVolume(AudioManager.STREAM_MUSIC);
    mVolumeSeekBar.setProgress(currentVolume);
    // Map the volume seek bar position to the player volume.
    mVolumeSeekBar.setOnSeekBarChangeListener(new SeekBar.OnSeekBarChangeListener() {
        @Override
        public void onProgressChanged(SeekBar seekBar, int progress, boolean fromUser) {
            if (fromUser && (mPlayer != null)) {
                MediaPlayer.VolumeInfo volumeInfo = mPlayer.getVolume();
                volumeInfo.left = (float) (progress * 0.1);
                volumeInfo.right = (float) (progress * 0.1);
                mPlayer.setVolume(volumeInfo.left, volumeInfo.right);
            }
        }

        @Override
        public void onStartTrackingTouch(SeekBar seekBar) {
        }

        @Override
        public void onStopTrackingTouch(SeekBar seekBar) {
        }
    });
}
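The two seek bars in initSeekBar() rely on simple conversions: playback progress is a 0 to 100 percentage of mDuration, and volume progress is multiplied by 0.1. A minimal sketch of those conversions as pure, testable helpers follows. The class name `PlaybackMath` is hypothetical, the clamping is an added safeguard that the code above does not perform, and `progressToGain` assumes the volume seek bar's maximum is 10, so that its top position maps to a gain of 1.0.

```java
/** Hypothetical helper holding the seek-bar conversions used by the player page. */
final class PlaybackMath {
    private PlaybackMath() {
    }

    /** Seek-bar percentage (0..100) to a position in ms, as in onStopTrackingTouch(). */
    static long progressToMsec(int progress, long durationMsec) {
        if (durationMsec <= 0) {
            return 0;
        }
        int clamped = Math.max(0, Math.min(100, progress));
        return (long) (clamped / 100.0 * durationMsec);
    }

    /** Position in ms to a seek-bar percentage, as in the MSG_UPDATE_PROGRESS_POS handler. */
    static int msecToProgress(long currMsec, long durationMsec) {
        if (durationMsec <= 0) {
            return 0;
        }
        int percent = (int) (currMsec / (double) durationMsec * 100);
        return Math.max(0, Math.min(100, percent));
    }

    /** Volume seek-bar position to a player gain, assuming the bar's maximum is 10. */
    static float progressToGain(int progress) {
        int clamped = Math.max(0, Math.min(10, progress));
        return (float) (clamped * 0.1);
    }
}
```

For example, dragging the playback bar to 50 on a 200,000 ms video seeks to 100,000 ms.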

b) Define the initFwk() method, which handles MSG_INIT_FWK messages and initializes AV Pipeline Kit.

private void initFwk() {
    if (AVPLoader.isInit()) {
        Log.d(TAG, "avp framework already inited");
        return;
    }
    boolean ret = AVPLoader.initFwk(getApplicationContext());
    if (ret) {
        makeToastAndRecordLog(Log.INFO, "avp framework load succ");
    } else {
        makeToastAndRecordLog(Log.ERROR, "avp framework load failed");
    }
}
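initFwk() is idempotent: it returns early when AVPLoader.isInit() reports that the framework is already loaded. That is enough in this codelab because only the player thread calls it. If your app triggers initialization from more than one thread, a synchronized wrapper such as the sketch below keeps the check and the load atomic. `FrameworkLoader` is a hypothetical interface standing in for AVPLoader so that the logic can be exercised without the SDK; it is not part of AV Pipeline Kit.

```java
import java.util.function.Consumer;

/** Hypothetical stand-in for AVPLoader.isInit() and AVPLoader.initFwk(context). */
interface FrameworkLoader {
    boolean isInit();

    boolean init();
}

/** Mirrors initFwk(): load the framework at most once and log the outcome. */
final class FrameworkInitializer {
    private final FrameworkLoader loader;
    private final Consumer<String> log;

    FrameworkInitializer(FrameworkLoader loader, Consumer<String> log) {
        this.loader = loader;
        this.log = log;
    }

    /** Returns true if the framework is loaded after the call; synchronized so two callers cannot both load it. */
    synchronized boolean ensureInit() {
        if (loader.isInit()) {
            log.accept("avp framework already inited");
            return true;
        }
        boolean ok = loader.init();
        log.accept(ok ? "avp framework load succ" : "avp framework load failed");
        return ok;
    }
}
```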

c) Define the startPlayMedia() method, which handles MSG_CREATE messages. It creates a MediaPlayer instance; sets the graph, display surface, data source, and listeners; and then calls prepare(). Playback itself starts in onPrepareDone().

private void startPlayMedia() {
    if (mFilePath == null) {
        return;
    }
    Log.i(TAG, "start to play media file " + mFilePath);
    mPlayer = MediaPlayer.create(getPlayerType());
    if (mPlayer == null) {
        return;
    }
    setGraph();
    if (getPlayerType() == MediaPlayer.PLAYER_TYPE_AV) {
        int ret = mPlayer.setVideoDisplay(mVideoHolder.getSurface());
        if (ret != 0) {
            makeToastAndRecordLog(Log.ERROR, "setVideoDisplay failed, ret=" + ret);
            return;
        }
    }
    int ret = mPlayer.setDataSource(mFilePath);
    if (ret != 0) {
        makeToastAndRecordLog(Log.ERROR, "setDataSource failed, ret=" + ret);
        return;
    }
    mPlayer.setOnStartCompletedListener(new MediaPlayer.OnStartCompletedListener() {
        @Override
        public void onStartCompleted(MediaPlayer mp, int param1, int param2, MediaParcel parcel) {
            if (param1 != 0) {
                Log.e(TAG, "start failed, return " + param1);
            } else {
                mPlayerHandler.sendEmptyMessage(MSG_START_DONE);
            }
        }
    });
    mPlayer.setOnPreparedListener(new MediaPlayer.OnPreparedListener() {
        @Override
        public void onPrepared(MediaPlayer mp, int param1, int param2, MediaParcel parcel) {
            if (param1 != 0) {
                Log.e(TAG, "prepare failed, return " + param1);
            } else {
                mPlayerHandler.sendEmptyMessage(MSG_PREPARE_DONE);
            }
        }
    });
    mPlayer.setOnPlayCompletedListener(new MediaPlayer.OnPlayCompletedListener() {
        @Override
        public void onPlayCompleted(MediaPlayer mp, int param1, int param2, MediaParcel parcel) {
            Message msgTime = mMainHandler.obtainMessage();
            msgTime.obj = mDuration;
            msgTime.what = MSG_UPDATE_PROGRESS_POS;
            mMainHandler.sendMessage(msgTime);
            Log.i(TAG, "sendMessage duration");
            mPlayerHandler.sendEmptyMessage(MSG_RELEASE);
        }
    });
    setListener();
    mPlayer.prepare();
}
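startPlayMedia() checks the int returned by each setup call (setVideoDisplay and setDataSource) and stops at the first non-zero value. If you add more calls of that kind, a small helper keeps the pattern in one place. The sketch below is a plain-Java illustration, not part of AV Pipeline Kit: `SetupChain` and its methods are hypothetical, and it assumes, as the code above does, that 0 means success.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.function.IntSupplier;

/** Hypothetical helper: runs named setup calls in order and stops at the first non-zero result. */
final class SetupChain {
    private final List<String> names = new ArrayList<>();
    private final List<IntSupplier> steps = new ArrayList<>();

    /** Registers a call whose int result is 0 on success. */
    SetupChain step(String name, IntSupplier call) {
        names.add(name);
        steps.add(call);
        return this;
    }

    /** Returns null if every step returned 0, otherwise "name failed, ret=N" for the first failure. */
    String run() {
        for (int i = 0; i < steps.size(); i++) {
            int ret = steps.get(i).getAsInt();
            if (ret != 0) {
                return names.get(i) + " failed, ret=" + ret;
            }
        }
        return null;
    }
}
```

Inside startPlayMedia(), this might be used as `new SetupChain().step("setDataSource", () -> mPlayer.setDataSource(mFilePath)).run()`, passing any non-null result to makeToastAndRecordLog(Log.ERROR, ...). Later steps are never invoked once one fails, matching the early returns above.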

d) Define the onPrepareDone() method, which handles MSG_PREPARE_DONE messages and starts MediaPlayer once prepare() has completed.

private void onPrepareDone() {
    Log.i(TAG, "onPrepareDone");
    if (mPlayer == null) {
        return;
    }
    mPlayer.start();
}

e) Define the onStartDone() method, which handles MSG_START_DONE messages. It records the media duration, creates a main-thread handler that updates the seek bar, current position, and total duration on the playback page, and starts polling the playback position.

private void onStartDone() {
    Log.i(TAG, "onStartDone");
    mIsPlaying = true;
    mDuration = mPlayer.getDuration();
    Log.d(TAG, "duration=" + mDuration);
    mMainHandler = new Handler(Looper.getMainLooper()) {
        @Override
        public void handleMessage(Message msg) {
            switch (msg.what) {
                case MSG_UPDATE_PROGRESS_POS: {
                    long currMsec = (long) msg.obj;
                    Log.i(TAG, "currMsec: " + currMsec);
                    // mSeekBar is the playback seek bar set up in initSeekBar().
                    mSeekBar.setProgress((int) (currMsec / (double) mDuration * 100));
                    mTextCurMsec.setText(msecToString(currMsec));
                    // Without this break, every progress update would fall through to MSG_SET_DURATION.
                    break;
                }
                case MSG_SET_DURATION: {
                    mTextTotalMsec.setText(msecToString(mDuration));
                    break;
                }
            }
            super.handleMessage(msg);
        }
    };
    // Avoid running two polling loops in case start completes again, e.g. after resuming from pause.
    mPlayerHandler.removeMessages(MSG_GET_CURRENT_POS);
    mPlayerHandler.sendEmptyMessage(MSG_GET_CURRENT_POS);
    mMainHandler.sendEmptyMessage(MSG_SET_DURATION);
}
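onStartDone() calls a msecToString() helper that this codelab does not show. One possible implementation is sketched below as a static method, assuming an mm:ss display that switches to h:mm:ss for videos of an hour or longer. The class name `TimeFormat` and the display format are assumptions, not taken from the sample project.

```java
import java.util.Locale;

/** Hypothetical home for the msecToString() helper referenced by onStartDone(). */
final class TimeFormat {
    private TimeFormat() {
    }

    /** Formats milliseconds as "mm:ss", or "h:mm:ss" once the value reaches one hour. */
    static String msecToString(long msec) {
        long totalSec = Math.max(0, msec) / 1000;
        long hours = totalSec / 3600;
        long minutes = (totalSec % 3600) / 60;
        long seconds = totalSec % 60;
        if (hours > 0) {
            return String.format(Locale.US, "%d:%02d:%02d", hours, minutes, seconds);
        }
        return String.format(Locale.US, "%02d:%02d", minutes, seconds);
    }
}
```

Partial seconds are truncated, so 65,999 ms is shown as 01:05. Passing Locale.US keeps the digits ASCII regardless of the device locale.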

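f) Define the getCurrentPos() method, which handles MSG_GET_CURRENT_POS messages. It reads the current playback position, sends it to the main thread as an MSG_UPDATE_PROGRESS_POS message while the position is below the total duration, and schedules itself to run again after 300 ms.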

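private void getCurrentPos() {
    long currMsec = mPlayer.getCurrentPosition();
    if (currMsec < mDuration) {
        // The message is handled on the main thread, so obtain it from mMainHandler.
        Message msgTime = mMainHandler.obtainMessage();
        msgTime.obj = currMsec;
        msgTime.what = MSG_UPDATE_PROGRESS_POS;
        mMainHandler.sendMessage(msgTime);
    }
    // Poll again in 300 ms; stopPlayMedia() removes pending MSG_GET_CURRENT_POS messages.
    mPlayerHandler.sendEmptyMessageDelayed(MSG_GET_CURRENT_POS, 300);
}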
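getCurrentPos() polls by re-posting MSG_GET_CURRENT_POS to itself every 300 ms, and stopPlayMedia() ends the loop with removeMessages(). Outside Android, the same fixed-delay polling can be expressed with a ScheduledExecutorService. The sketch below is a hypothetical `PositionPoller` class meant only to illustrate the pattern; in the app, the Handler-based loop shown in this step is what the codelab uses.

```java
import java.util.concurrent.Executors;
import java.util.concurrent.ScheduledExecutorService;
import java.util.concurrent.ScheduledFuture;
import java.util.concurrent.TimeUnit;
import java.util.function.LongConsumer;
import java.util.function.LongSupplier;

/** Hypothetical poller mirroring the MSG_GET_CURRENT_POS loop on a plain Java thread. */
final class PositionPoller {
    private final ScheduledExecutorService executor = Executors.newSingleThreadScheduledExecutor();
    private ScheduledFuture<?> task;

    /** Starts polling; like getCurrentPos(), only positions below durationMsec are reported. */
    synchronized void start(LongSupplier position, long durationMsec, long periodMsec,
            LongConsumer onProgress) {
        stop();
        task = executor.scheduleWithFixedDelay(() -> {
            long curr = position.getAsLong();
            if (curr < durationMsec) {
                onProgress.accept(curr);
            }
        }, 0, periodMsec, TimeUnit.MILLISECONDS);
    }

    /** Cancels polling, like removeMessages(MSG_GET_CURRENT_POS) in stopPlayMedia(). */
    synchronized void stop() {
        if (task != null) {
            task.cancel(false);
            task = null;
        }
    }

    /** Stops polling and terminates the worker thread. */
    void shutdown() {
        stop();
        executor.shutdownNow();
    }
}
```

scheduleWithFixedDelay measures the delay from the end of one poll to the start of the next, which matches re-posting a delayed message at the end of each getCurrentPos() call.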

g) Define the stopPlayMedia() method, which handles MSG_RELEASE messages. It stops position polling, stops playback, and releases MediaPlayer.

private void stopPlayMedia() {
    if (mFilePath == null) {
        return;
    }
    Log.i(TAG, "stopPlayMedia doing");
    mIsPlaying = false;
    if (mPlayer == null) {
        return;
    }
    mPlayerHandler.removeMessages(MSG_GET_CURRENT_POS);
    mPlayer.stop();
    mPlayer.reset();
    mPlayer.release();
    mPlayer = null;
    Log.i(TAG, "stopPlayMedia done");
}
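The player thread and surfaceDestroyed() cooperate through a CountDownLatch: the UI thread posts MSG_RELEASE and then blocks until the player thread has run stopPlayMedia() and counted the latch down, so the callback does not return while MediaPlayer may still be using the surface. The plain-Java model below reproduces that ordering with a single-thread executor standing in for the HandlerThread; `PlayerThreadModel` and its string events are hypothetical. One design difference: the sketch creates a fresh latch on the waiting thread for each release, which sidesteps the question of whether mCountDownLatch has already been assigned when surfaceDestroyed() reads it.

```java
import java.util.List;
import java.util.concurrent.CopyOnWriteArrayList;
import java.util.concurrent.CountDownLatch;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.TimeUnit;

/** Hypothetical model of the player thread: steps run one at a time, in posting order. */
final class PlayerThreadModel {
    private final ExecutorService playerThread = Executors.newSingleThreadExecutor();
    final List<String> events = new CopyOnWriteArrayList<>();

    /** Like sendEmptyMessage(): queue a step on the player thread without waiting. */
    void post(String event) {
        playerThread.execute(() -> events.add(event));
    }

    /** Like surfaceDestroyed(): queue the release step, then block until it has run. */
    boolean releaseAndWait(long timeoutMsec) throws InterruptedException {
        CountDownLatch released = new CountDownLatch(1);
        playerThread.execute(() -> {
            events.add("RELEASE"); // stands in for stopPlayMedia()
            released.countDown();
        });
        return released.await(timeoutMsec, TimeUnit.MILLISECONDS);
    }

    void shutdown() {
        playerThread.shutdown();
    }
}
```

Because the executor has a single thread, RELEASE can only run after every step posted before it, just as MSG_RELEASE is handled after any MSG_CREATE or MSG_START_DONE already in the queue.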

Configuring the Video Super-Resolution Plugin

- The following shows the graph configuration file PlayerGraphCV.xml preset in AV Pipeline Kit.

<?xml version="1.0"?>
<Nodes>
    <node id="0" name="AVDemuxer" mime="media/demuxer" type="source">
        <!-- The original data stream is split into a video stream for video decoding on node 1 and an audio stream for audio decoding on node 2. -->
        <next id="1"/>
        <next id="2"/>
    </node>
    <!-- Node 1 passes the images decoded from the stream to node 3, the CVFilter node, so its next id is 3. -->
    <node id="1" name="MediaCodecDecoder" mime="video/avc" type="filter">
        <next id="3"/>
    </node>
    <node id="2" name="FFmpegNode" mime="audio/aac" type="filter">
        <next id="4"/>
    </node>
    <!-- Set the node name, MIME type, and type by referring to the sample code. Each node ID must be unique. It is recommended that you increment the ID by 1 for each subsequent node. -->
    <node id="3" name="CVFilter" mime="video/cv-filter" type="filter">
        <!-- next id indicates the next destination node of the video stream. In this example, the next destination is the display node. -->
        <next id="5"/>
    </node>
    <node id="4" name="AudioSinkOpenSL" mime="audio/sink" type="sink">
    </node>
    <node id="5" name="VideoSinkBasic" mime="video/sink" type="sink">
    </node>
</Nodes>

- Pass the absolute path of the configuration file to MediaPlayer through setParameter as a key-value pair whose key is MediaMeta.MEDIA_GRAPH_PATH, and set MediaMeta.MEDIA_ENABLE_CV to 1 to enable CVFilter. You can wrap these settings in a custom method and call it as required. The following setGraph method is an example.

@Override
protected void setGraph() {
    MediaMeta meta = new MediaMeta();
    meta.setString(MediaMeta.MEDIA_GRAPH_PATH, getExternalFilesDir(null).getPath() + "/PlayerGraphCV.xml");
    meta.setInt32(MediaMeta.MEDIA_ENABLE_CV, 1);
    mPlayer.setParameter(meta);
}

- Control the video super-resolution function by setting MediaMeta.MEDIA_ENABLE_CV to 1 (enabled) or 0 (disabled) and passing the value to MediaPlayer through the setParameter API.

// Disable super-resolution (set the value back to 1 to enable it again).
meta.setInt32(MediaMeta.MEDIA_ENABLE_CV, 0);
mPlayer.setParameter(meta);
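Because the graph file is plain XML, an edited copy can be sanity-checked before its path is passed through MediaMeta.MEDIA_GRAPH_PATH. The sketch below uses the JDK's DOM parser to apply two rules taken from the file's comments: node IDs must be unique, and every `<next id=...>` must refer to a declared node. `GraphCheck` is a hypothetical helper; it does not check whether node names or MIME types are supported by AV Pipeline Kit.

```java
import java.io.ByteArrayInputStream;
import java.nio.charset.StandardCharsets;
import java.util.ArrayList;
import java.util.HashSet;
import java.util.List;
import java.util.Set;
import javax.xml.parsers.DocumentBuilderFactory;
import org.w3c.dom.Document;
import org.w3c.dom.Element;
import org.w3c.dom.NodeList;

/** Hypothetical pre-flight check for a player graph file such as PlayerGraphCV.xml. */
final class GraphCheck {
    private GraphCheck() {
    }

    /** Returns the problems found; an empty list means the graph passed these two checks. */
    static List<String> validate(String xml) throws Exception {
        Document doc = DocumentBuilderFactory.newInstance().newDocumentBuilder()
                .parse(new ByteArrayInputStream(xml.getBytes(StandardCharsets.UTF_8)));
        NodeList nodes = doc.getElementsByTagName("node");
        List<String> problems = new ArrayList<>();
        Set<String> ids = new HashSet<>();
        // Pass 1: collect node IDs and flag duplicates.
        for (int i = 0; i < nodes.getLength(); i++) {
            String id = ((Element) nodes.item(i)).getAttribute("id");
            if (!ids.add(id)) {
                problems.add("duplicate node id " + id);
            }
        }
        // Pass 2: every <next id="..."> must name a declared node.
        for (int i = 0; i < nodes.getLength(); i++) {
            Element node = (Element) nodes.item(i);
            NodeList nexts = node.getElementsByTagName("next");
            for (int j = 0; j < nexts.getLength(); j++) {
                String target = ((Element) nexts.item(j)).getAttribute("id");
                if (!ids.contains(target)) {
                    problems.add("node " + node.getAttribute("id") + " points to missing node " + target);
                }
            }
        }
        return problems;
    }
}
```

Running it on the unmodified PlayerGraphCV.xml above should yield an empty list, because IDs 0 to 5 are unique and every next id refers to one of them.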

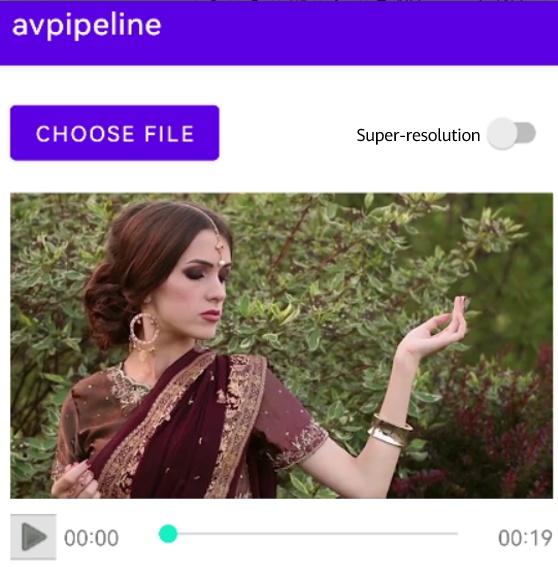

Video effect with super-resolution disabled:

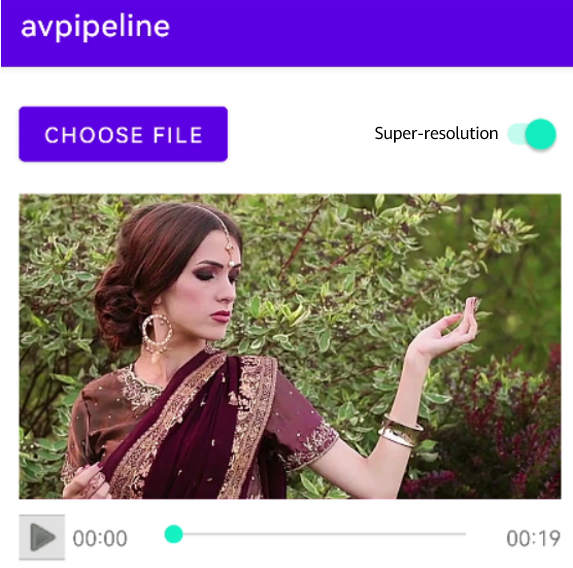

Video effect with super-resolution enabled:

6. Congratulations

Well done. You have successfully completed this codelab and learned how to:

- Create an app in AppGallery Connect.

- Integrate the video super-resolution plugin of AV Pipeline Kit.

- Call the video super-resolution plugin of AV Pipeline Kit.

7. Reference

For more information, see the related documents.
