The CG Plug-in System Framework is a key component of the Vulkan-based high-performance CG Rendering Framework. It provides plug-in management and plug-in development standards that allow you to extend and enhance the capabilities of CG Kit, enabling easy and efficient development.

You need to perform the following operations:

In this codelab, you will use the demo project to call the CG Kit APIs provided by Huawei. Through this demo project, you will:

To integrate HUAWEI CG Kit, you must complete the following preparations:

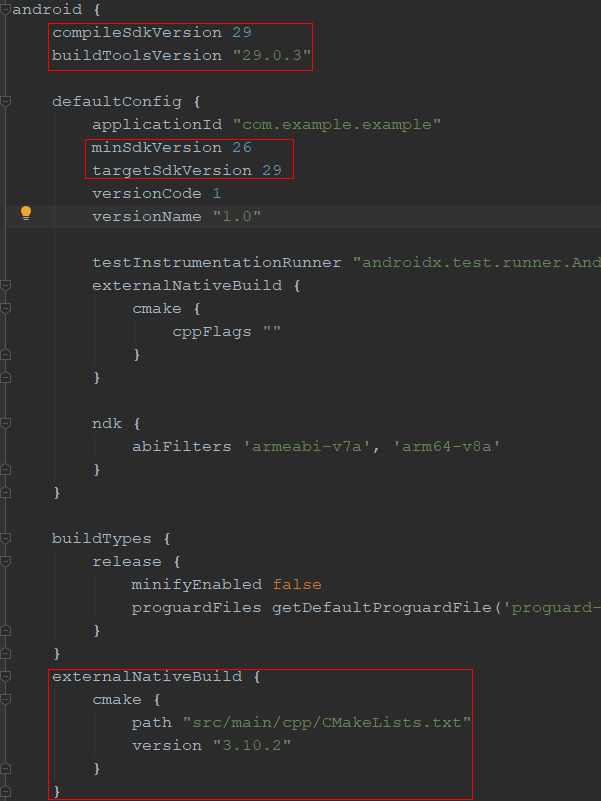

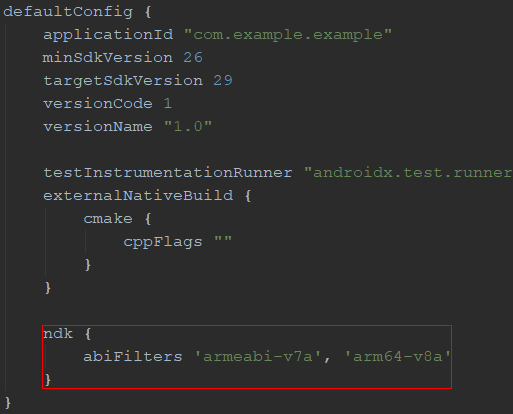

Configure the NDK ABI filter.

cmake_minimum_required(VERSION 3.4.1)

include_directories(

${CMAKE_SOURCE_DIR}/include/CGRenderingFramework )

include_directories(

${CMAKE_SOURCE_DIR}/include/MainApplication

${CMAKE_SOURCE_DIR}/include/OSRPlugin )

add_library(

main-lib

SHARED

source/Main.cpp

source/MainApplication.cpp

source/OSRPlugin.cpp)

ADD_LIBRARY(

cgkit

SHARED

IMPORTED)

set_target_properties(cgkit

PROPERTIES IMPORTED_LOCATION

${CMAKE_SOURCE_DIR}/../../../libs/${ANDROID_ABI}/libcgkit.so

)

SET(

VULKAN_INCLUDE_DIR

"$ENV{VULKAN_SDK}/include")

#"${ANDROID_NDK}/sources/third_party/vulkan/src/include")

include_directories(${VULKAN_INCLUDE_DIR})

SET(

NATIVE_APP_GLUE_DIR

"${ANDROID_NDK}/sources/android/native_app_glue")

FILE(

GLOB NATIVE_APP_GLUE_FILES

"${NATIVE_APP_GLUE_DIR}/*.c"

"${NATIVE_APP_GLUE_DIR}/*.h")

ADD_LIBRARY(native_app_glue

STATIC

${NATIVE_APP_GLUE_FILES})

TARGET_INCLUDE_DIRECTORIES(

native_app_glue

PUBLIC

${NATIVE_APP_GLUE_DIR})

find_library(

log-lib

log )

target_link_libraries(

main-lib

cgkit

native_app_glue

android

${log-lib} )

SET(

CMAKE_SHARED_LINKER_FLAGS

"${CMAKE_SHARED_LINKER_FLAGS} -u ANativeActivity_onCreate")

width=xxx (width of each square texture, in pixels)

height=xxx (height of each square texture, in pixels)

depth=xxx (texture pixel depth of each square)

mipmap=xxx (number of mipmap layers)

face=xxx (number of cube faces)

channel=xxx (number of color channels of each square texture, for example, 4 for RGBA)

suffix=xxx (format of each square texture, for example, .png)

cubeface_neg_xi (left side of the cube)

cubeface_neg_yi (bottom of the cube)

cubeface_neg_zi (front side of the cube)

cubeface_pos_xi (right side of the cube)

cubeface_pos_yi (top of the cube)

cubeface_pos_zi (back side of the cube)
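The fields above read like a plain-text descriptor with one `key=value` pair per line. As a minimal sketch under that assumption (the helper names `ParseCubemapDescriptor` and `IsCompleteCubemap` are hypothetical and not part of CG Kit, and `\n` line endings are assumed), such a descriptor could be read into a map and checked for six faces like this:

```cpp
#include <cassert>
#include <map>
#include <sstream>
#include <string>

// Hypothetical helper, not a CG Kit API: parses "key=value" lines such as
// "width=512" into a string map. Lines without '=' are ignored.
std::map<std::string, std::string> ParseCubemapDescriptor(const std::string &text)
{
    std::map<std::string, std::string> fields;
    std::istringstream stream(text);
    std::string line;
    while (std::getline(stream, line)) {
        const std::size_t eq = line.find('=');
        if (eq == std::string::npos) {
            continue;
        }
        fields[line.substr(0, eq)] = line.substr(eq + 1);
    }
    return fields;
}

// Returns true when the descriptor declares exactly six cube faces.
bool IsCompleteCubemap(const std::map<std::string, std::string> &fields)
{
    const auto face = fields.find("face");
    return face != fields.end() && face->second == "6";
}
```

Calling `ParseCubemapDescriptor("width=512\nface=6\n")` would yield a map in which `width` is `"512"` and `face` is `"6"`. CG Kit loads the real descriptor itself, so this only illustrates the file shape.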

layout(location=0) in vec3 position;

layout(location=1) in vec2 texcoord;

layout(location=2) in vec3 normal;

layout(location=3) in vec3 tangent;

layout(set=0, binding=0) uniform GlobalUniform

{

mat4 modelViewProjection;

mat4 model;

mat4 view;

mat4 projection;

mat4 projectionOrtho;

mat4 viewProjection;

mat4 viewProjectionInv;

mat4 viewProjectionOrtho;

vec4 resolution;

vec4 cameraPosition;

};

#define MAX_FORWARD_LIGHT_COUNT 16

#define DIRECTIONAL_LIGHT 0

#define POINT_LIGHT 1

#define SPOT_LIGHT 2

struct LightData

{

// xyz indicates the color, and w indicates the strength.

vec4 color;

// xyz indicates the position.

vec4 position;

// xyz indicates the direction.

vec4 direction;

// x indicates the type.

vec4 type_angle;

};

layout(set=0, binding=6) uniform LightsInfos

{

LightData lights[MAX_FORWARD_LIGHT_COUNT];

// Number of light sources

uint count;

};
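When reasoning about the light uniform block on the CPU side, it can help to mirror it as a C++ struct. The sketch below assumes the block uses the std140 layout (the default for uniform blocks in Vulkan GLSL) and a light count of 16. The type names are illustrative only, and CG Kit fills this buffer itself, so nothing here is an API you need to call.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>

constexpr std::size_t kMaxForwardLightCount = 16;

struct Vec4 {
    float x, y, z, w;
};

// Mirrors the GLSL LightData struct: four vec4 members, 16 bytes each.
struct LightData {
    Vec4 color;      // xyz: color, w: strength
    Vec4 position;   // xyz: position
    Vec4 direction;  // xyz: direction
    Vec4 typeAngle;  // x: light type
};

// Mirrors the LightsInfos uniform block under the assumed std140 layout.
struct LightsInfos {
    LightData lights[kMaxForwardLightCount];
    std::uint32_t count;
    std::uint32_t padding[3];  // rounds the block size up to a multiple of 16
};

static_assert(sizeof(LightData) == 64, "LightData must be 4 x vec4");
static_assert(offsetof(LightsInfos, count) == 1024, "count follows the array");
```

Under these assumptions each light occupies 64 bytes, so the `count` member starts at byte offset 1024.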

albedoTexture (albedo texture, corresponding to TEXTURE_TYPE_ALBEDO of class Material)

normalTexture (normal texture, corresponding to TEXTURE_TYPE_NORMAL of class Material)

pbrTexture (PBR texture (channel x for ambient occlusion (AO), channel y for roughness, and channel z for metallic), corresponding to TEXTURE_TYPE_PBRTEXTURE of class Material)

emissionTexture (emission map, corresponding to TEXTURE_TYPE_EMISSION of class Material)

envTexture (environment map, corresponding to TEXTURE_TYPE_ENVIRONMENTMAP of class Material)
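The channel packing of the PBR texture can be illustrated on the CPU. This is a sketch only: it assumes that channels x, y, and z correspond to the red, green, and blue bytes of an 8-bit-per-channel image, and `PbrSample` and `UnpackPbrTexel` are hypothetical names rather than CG Kit types.

```cpp
#include <cassert>
#include <cstdint>

struct PbrSample {
    float ambientOcclusion;  // channel x
    float roughness;         // channel y
    float metallic;          // channel z
};

// Converts the first three 8-bit channels of one texel to values in [0, 1].
PbrSample UnpackPbrTexel(std::uint8_t r, std::uint8_t g, std::uint8_t b)
{
    return PbrSample{r / 255.0f, g / 255.0f, b / 255.0f};
}
```

In the actual pipeline this unpacking happens in the fragment shader when it samples the texture.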

void android_main(android_app* state)

{

// Instantiate the main application of the sample demo.

auto app = CreateMainApplication();

if (app == nullptr) {

return;

}

// Start platform rendering.

app->Start((void*)(state));

// Start the main rendering loop.

app->MainLoop();

CG_SAFE_DELETE(app);

}

// The application object is instantiated in MainApplication.cpp.

BaseApplication *CreateMainApplication()

{

return new MainApplication();

}

void MainApplication::InitScene()

{

LOGINFO("MainApplication InitScene.");

BaseApplication::InitScene();

// step 1:Add camera

LOGINFO("Enter init main camera.");

SceneObject *cameraObj = CG_NEW SceneObject(nullptr);

if (cameraObj == nullptr) {

LOGERROR("Failed to create camera object.");

return;

}

Camera *mainCamera = cameraObj->AddComponent<Camera>();

if (mainCamera == nullptr) {

CG_SAFE_DELETE(cameraObj);

LOGERROR("Failed to create main camera.");

return;

}

const f32 FOV = 60.f;

const f32 NEAR = 0.1f;

const f32 FAR = 500.0f;

const Vector3 EYE_POSITION(0.0f, 0.0f, 0.0f);

cameraObj->SetPosition(EYE_POSITION);

mainCamera->SetProjectionType(ProjectionType::PROJECTION_TYPE_PERSPECTIVE);

mainCamera->SetProjection(FOV, gCGKitInterface.GetAspectRadio(), NEAR, FAR);

mainCamera->SetViewport(0, 0, gCGKitInterface.GetScreenWidth(), gCGKitInterface.GetScreenHeight());

gSceneManager.SetMainCamera(mainCamera);

}
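To make the camera parameters concrete, the following sketch builds a perspective matrix from a field of view, aspect ratio, and near and far planes. It is not CG Kit's implementation. It assumes a vertical field of view in degrees, a right-handed view space, column-major storage, and the [0, 1] depth range used by Vulkan, and `MakePerspective` is a hypothetical helper.

```cpp
#include <array>
#include <cassert>
#include <cmath>

// Column-major 4x4 perspective matrix for a right-handed view space and a
// [0, 1] depth range. fovYDegrees is the vertical field of view.
std::array<float, 16> MakePerspective(float fovYDegrees, float aspect, float nearZ, float farZ)
{
    const float kPi = 3.14159265358979f;
    const float f = 1.0f / std::tan(fovYDegrees * kPi / 360.0f);
    std::array<float, 16> m{};  // zero-initialized
    m[0] = f / aspect;
    m[5] = f;
    m[10] = farZ / (nearZ - farZ);
    m[11] = -1.0f;
    m[14] = (nearZ * farZ) / (nearZ - farZ);
    return m;
}
```

With the values from the step above (60 degrees, near 0.1, far 500), a point on the near plane maps to depth 0 and a point on the far plane maps to depth 1.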

void MainApplication::InitScene()

{

// step 2:Load default model

String modelName = "models/Avatar/body.obj"; // Replace the value with the directory that stores the generated model data.

Model *model = static_cast<Model *>(gResourceManager.Get(modelName));

// step 3:New SceneObject and add SceneObject to SceneManager

MeshRenderer *meshRenderer = nullptr;

SceneObject *object = gSceneManager.CreateSceneObject();

if (object != nullptr) {

// step 4:Add MeshRenderer Component to SceneObject

meshRenderer = object->AddComponent<MeshRenderer>();

// step 5:Relate model's submesh to MeshRenderer

if (meshRenderer != nullptr && model != nullptr && model->GetMesh() != nullptr) {

meshRenderer->SetMesh(model->GetMesh());

} else {

LOGERROR("Failed to add mesh renderer.");

}

} else {

LOGERROR("Failed to create scene object.");

}

if (model != nullptr) {

const Mesh *mesh = model->GetMesh();

if (mesh != nullptr) {

LOGINFO("Model submesh count %d.", mesh->GetSubMeshCount());

LOGINFO("Model vertex count %d.", mesh->GetVertexCount());

// step 6:Load Texture

String texAlbedo = "models/Avatar/Albedo_01.png";

String texNormal = "models/Avatar/Normal_01.png";

String texPbr = "models/Avatar/Pbr_01.png";

String texEmissive = "shaders/pbr_brdf.png";

u32 subMeshCnt = mesh->GetSubMeshCount();

for (u32 i = 0; i < subMeshCnt; ++i) {

SubMesh *subMesh = mesh->GetSubMesh(i);

if (subMesh == nullptr) {

LOGERROR("Failed to get submesh.");

continue;

}

// step 7:Add Material

Material *material = dynamic_cast<Material *>(

gResourceManager.Get(ResourceType::RESOURCE_TYPE_MATERIAL));

if (material == nullptr) {

LOGERROR("Failed to create new material.");

return;

}

material->Init();

material->SetSubMesh(subMesh);

material->SetTexture(TextureType::TEXTURE_TYPE_ALBEDO, texAlbedo);

material->SetSamplerParam(TextureType::TEXTURE_TYPE_ALBEDO, SAMPLER_FILTER_BILINEAR, SAMPLER_FILTER_BILINEAR,

SAMPLER_MIPMAP_BILINEAR, SAMPLER_ADDRESS_CLAMP);

material->SetTexture(TextureType::TEXTURE_TYPE_NORMAL, texNormal);

material->SetSamplerParam(TextureType::TEXTURE_TYPE_NORMAL, SAMPLER_FILTER_BILINEAR, SAMPLER_FILTER_BILINEAR,

SAMPLER_MIPMAP_BILINEAR, SAMPLER_ADDRESS_CLAMP);

material->SetTexture(TextureType::TEXTURE_TYPE_PBRTEXTURE, texPbr);

material->SetSamplerParam(TextureType::TEXTURE_TYPE_PBRTEXTURE, SAMPLER_FILTER_BILINEAR, SAMPLER_FILTER_BILINEAR,

SAMPLER_MIPMAP_BILINEAR, SAMPLER_ADDRESS_CLAMP);

material->SetTexture(TextureType::TEXTURE_TYPE_EMISSION, texEmissive);

material->SetSamplerParam(TextureType::TEXTURE_TYPE_EMISSION, SAMPLER_FILTER_BILINEAR, SAMPLER_FILTER_BILINEAR,

SAMPLER_MIPMAP_BILINEAR, SAMPLER_ADDRESS_CLAMP);

material->SetTexture(TextureType::TEXTURE_TYPE_ENVIRONMENTMAP, m_envMap);

material->SetSamplerParam(TextureType::TEXTURE_TYPE_ENVIRONMENTMAP, SAMPLER_FILTER_BILINEAR, SAMPLER_FILTER_BILINEAR,

SAMPLER_MIPMAP_BILINEAR, SAMPLER_ADDRESS_CLAMP);

material->AttachShaderStage(ShaderStageType::SHADER_STAGE_TYPE_VERTEX, "shaders/pbr_vert.spv");

material->AttachShaderStage(ShaderStageType::SHADER_STAGE_TYPE_FRAGMENT, "shaders/pbr_frag.spv");

material->SetCullMode(CULL_MODE_NONE);

material->SetDepthTestEnable(true);

material->SetDepthWriteEnable(true);

material->Create();

if (meshRenderer != nullptr) {

meshRenderer->SetMaterial(i, material);

}

}

} else {

LOGERROR("Failed to get mesh.");

}

} else {

LOGERROR("Failed to load model.");

}

m_sceneObject = object;

if (m_sceneObject != nullptr){

m_sceneObject->SetPosition(SCENE_OBJECT_POSITION);

m_sceneObject->SetScale(SCENE_OBJECT_SCALE);

}

m_objectRotation = Math::PI;

}

void MainApplication::InitScene()

{

// step 8:create sky box

SceneObject* skyboxObj = CreateSkybox();

if(skyboxObj != nullptr) {

skyboxObj->SetScale(Vector3(100.f, 100.f, 100.f));

}

}

SceneObject* MainApplication::CreateSkybox()

{

String modelName = "models/test-cube.obj";

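Model *model = static_cast<Model *>(gResourceManager.Get(modelName));

if (model == nullptr || model->GetMesh() == nullptr) {

LOGERROR("Failed to load skybox model.");

return nullptr;

}

const Mesh *mesh = model->GetMesh();

// Get the submesh count

u32 subMeshCnt = mesh->GetSubMeshCount();

// Add to scene

SceneObject *sceneObj = gSceneManager.CreateSceneObject();

if (sceneObj == nullptr) {

LOGERROR("Failed to create skybox scene object.");

return nullptr;

}

MeshRenderer *meshRenderer = sceneObj->AddComponent<MeshRenderer>();

if (meshRenderer == nullptr) {

LOGERROR("Failed to add mesh renderer to skybox.");

return nullptr;

}

meshRenderer->SetMesh(model->GetMesh());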

for (u32 i = 0; i < subMeshCnt; ++i) {

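SubMesh *subMesh = mesh->GetSubMesh(i);

if (subMesh == nullptr) {

LOGERROR("Failed to get skybox submesh.");

continue;

}

// add Material

Material *material = dynamic_cast<Material *>(gResourceManager.Get(ResourceType::RESOURCE_TYPE_MATERIAL));

if (material == nullptr) {

LOGERROR("Failed to create skybox material.");

return nullptr;

}

material->Init();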

material->SetSubMesh(subMesh);

material->SetTexture(TextureType::TEXTURE_TYPE_ENVIRONMENTMAP, m_envMap);

material->SetSamplerParam(TextureType::TEXTURE_TYPE_ENVIRONMENTMAP, SAMPLER_FILTER_BILINEAR, SAMPLER_FILTER_BILINEAR,

SAMPLER_MIPMAP_BILINEAR, SAMPLER_ADDRESS_CLAMP);

material->AttachShaderStage(ShaderStageType::SHADER_STAGE_TYPE_VERTEX, "shaders/sky_vert.spv");

material->AttachShaderStage(ShaderStageType::SHADER_STAGE_TYPE_FRAGMENT, "shaders/sky_frag.spv");

material->SetCullMode(CULL_MODE_NONE);

material->SetDepthTestEnable(true);

material->SetDepthWriteEnable(true);

material->Create();

meshRenderer->SetMaterial(i, material);

}

sceneObj->SetScale(Vector3(1.f, 1.f, 1.f));

sceneObj->SetPosition(Vector3(0.0f, 0.0f, 0.0f));

sceneObj->SetRotation(Vector3(0.0f, 0.0f, 0.0f));

return sceneObj;

}

void MainApplication::InitScene()

{

// step 9:Add light

LOGINFO("Enter init light.");

SceneObject *lightObject = CG_NEW SceneObject(nullptr);

if (lightObject != nullptr) {

Light *lightCom = lightObject->AddComponent<Light>();

if (lightCom != nullptr) {

lightCom->SetColor(Vector3::ONE);

const Vector3 DIRECTION_LIGHT_DIR(0.1f, 0.2f, 1.0f);

lightCom->SetDirection(DIRECTION_LIGHT_DIR);

lightCom->SetLightType(LIGHT_TYPE_DIRECTIONAL);

LOGINFO("Left init light.");

} else {

LOGERROR("Failed to add component light.");

}

} else {

LOG_ALLOC_ERROR("New light object failed.");

}

SceneObject *pointLightObject = CG_NEW SceneObject(nullptr);

if (pointLightObject != nullptr) {

m_pointLightObject = pointLightObject;

Light *lightCom = pointLightObject->AddComponent<Light>();

if (lightCom != nullptr) {

const Vector3 POINT_LIGHT_COLOR(0.0f, 10000.0f, 10000.0f);

lightCom->SetColor(POINT_LIGHT_COLOR);

lightCom->SetLightType(LIGHT_TYPE_POINT);

} else {

LOGERROR("Failed to add component light.");

}

} else {

LOG_ALLOC_ERROR("New light object failed.");

}

}

void MainApplication::ProcessInputEvent(const InputEvent *inputEvent)

{

BaseApplication::ProcessInputEvent(inputEvent);

LOGINFO("MainApplication ProcessInputEvent.");

EventSource source = inputEvent->GetSource();

if (source == EVENT_SOURCE_TOUCHSCREEN) {

const TouchInputEvent *touchEvent = reinterpret_cast<const TouchInputEvent *>(inputEvent);

if (touchEvent == nullptr) {

LOGERROR("Failed to get touch event.");

return;

}

if (touchEvent->GetAction() == TOUCH_ACTION_DOWN) {

// The action is "touch down". Set the start flag of the touch event to true and calculate the rotation and scaling coordinates.

LOGINFO("Action move start.");

m_touchBegin = true;

if (!m_pluginTested && singleTapDetected) {

int64_t lastTouchDownTime = GetCurrentTime();

int64_t preTouchUpTime = nowTime;

ProcessSingleTap(lastTouchDownTime, preTouchUpTime);

}

} else if (touchEvent->GetAction() == TOUCH_ACTION_MOVE) {

// The action is "touch move". Set the model rotation and scaling parameters.

float touchPosDeltaX = touchEvent->GetPosX(touchEvent->GetTouchIndex()) - m_touchPosX;

float touchPosDeltaY = touchEvent->GetPosY(touchEvent->GetTouchIndex()) - m_touchPosY;

if (m_touchBegin) {

// Set model rotation.

if (fabs(touchPosDeltaX) > 2.f) {

if (touchPosDeltaX > 0.f) {

m_objectRotation -= 2.f * m_deltaTime;

} else {

m_objectRotation += 2.f * m_deltaTime;

}

LOGINFO("Set rotation start.");

}

// Set model scaling.

if (fabs(touchPosDeltaY) > 3.f) {

if (touchPosDeltaY > 0.f) {

m_objectScale -= 0.25f * m_deltaTime;

} else {

m_objectScale += 0.25f * m_deltaTime;

}

m_objectScale = min(1.25f, max(0.75f, m_objectScale));

LOGINFO("Set scale start.");

}

}

} else if (touchEvent->GetAction() == TOUCH_ACTION_UP) {

// The action is "touch up". The touch event has ended, so set the start flag to false.

LOGINFO("Action up.");

m_touchBegin = false;

} else if (touchEvent->GetAction() == TOUCH_ACTION_CANCEL) {

LOGINFO("Action cancel.");

m_touchBegin = false;

if (!m_pluginTested) {

singleTapDetected = true;

nowTime = GetCurrentTime();

}

}

m_touchPosX = touchEvent->GetPosX(touchEvent->GetTouchIndex());

m_touchPosY = touchEvent->GetPosY(touchEvent->GetTouchIndex());

}

}
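The rotation and scaling rules in the touch-move branch can be isolated as a pure function, which makes the thresholds and the clamp easier to reason about. This is a sketch: `ModelTransformState` and `ApplyTouchMove` are hypothetical names, and the constants are copied from the handler above.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>

struct ModelTransformState {
    float rotation;  // rotation angle around the Y axis
    float scale;     // multiplier applied to the base scale
};

// Applies one touch-move delta. A horizontal move of more than 2 pixels
// rotates the model; a vertical move of more than 3 pixels scales it, and
// the scale is clamped to [0.75, 1.25].
ModelTransformState ApplyTouchMove(ModelTransformState state, float deltaX, float deltaY, float deltaTime)
{
    if (std::fabs(deltaX) > 2.0f) {
        state.rotation += (deltaX > 0.0f ? -2.0f : 2.0f) * deltaTime;
    }
    if (std::fabs(deltaY) > 3.0f) {
        state.scale += (deltaY > 0.0f ? -0.25f : 0.25f) * deltaTime;
        state.scale = std::min(1.25f, std::max(0.75f, state.scale));
    }
    return state;
}
```

Small movements below the thresholds leave the state unchanged, which filters out jitter from a resting finger.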

void MainApplication::Update(float deltaTime)

{

LOGINFO("Update %f.", deltaTime);

m_deltaTime = deltaTime;

m_deltaAccumulate += m_deltaTime;

if (m_sceneObject != nullptr) {

// model rotation

m_sceneObject->SetRotation(Vector3(0.0f, m_objectRotation, 0.0f));

// model scaling

m_sceneObject->SetScale(SCENE_OBJECT_SCALE * m_objectScale);

}

const float POINT_HZ_X = 0.2f;

const float POINT_HZ_Y = 0.5f;

const float POINT_LIGHT_CIRCLE = 50.f;

if (m_pointLightObject) {

m_pointLightObject->SetPosition(Vector3(sin(m_deltaAccumulate * POINT_HZ_X) * POINT_LIGHT_CIRCLE,

sin(m_deltaAccumulate * POINT_HZ_Y) * POINT_LIGHT_CIRCLE + POINT_LIGHT_CIRCLE, cos(m_deltaAccumulate * POINT_HZ_X) * POINT_LIGHT_CIRCLE));

}

BaseApplication::Update(deltaTime);

}
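The point-light motion in `Update` is a circle in the XZ plane combined with a vertical oscillation. The sketch below reproduces that formula as a standalone function so that positions can be checked for a given elapsed time. `Position` and `PointLightPosition` are illustrative names, and the constants are copied from the code above.

```cpp
#include <cassert>
#include <cmath>

struct Position {
    float x, y, z;
};

// Position of the orbiting point light after elapsedSeconds of accumulated
// frame time. The rate constants multiply the time directly, so they act as
// angular rates.
Position PointLightPosition(float elapsedSeconds)
{
    const float kRateX = 0.2f;
    const float kRateY = 0.5f;
    const float kRadius = 50.0f;
    return Position{
        std::sin(elapsedSeconds * kRateX) * kRadius,
        std::sin(elapsedSeconds * kRateY) * kRadius + kRadius,
        std::cos(elapsedSeconds * kRateX) * kRadius};
}
```

At time zero the light sits at (0, 50, 50), and its height always stays between 0 and 100.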

void MainApplication::ProcessSingleTap(int64_t lastTouchDownTime, int64_t preTouchUpTime)

{

int64_t deltaTimeMs = std::abs(lastTouchDownTime - preTouchUpTime); // std::abs (from <cstdlib>) has a 64-bit overload.

const int doubleTapInterval = 500;

if (deltaTimeMs < doubleTapInterval) {

OSRPlugin plugin;

plugin.PluginTest();

m_pluginTested = true;

}

}
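The timing check that triggers the plug-in test can be expressed as a small predicate. This sketch assumes that both timestamps are in milliseconds, as the variable name `deltaTimeMs` suggests. `IsDoubleTap` is a hypothetical helper, not part of the demo.

```cpp
#include <cassert>
#include <cstdint>

// Returns true when the new touch-down happens within maxIntervalMs of the
// previous touch-up. The absolute difference is computed by hand to stay
// within 64-bit integer arithmetic.
bool IsDoubleTap(std::int64_t touchDownMs, std::int64_t previousTouchUpMs, std::int64_t maxIntervalMs = 500)
{
    const std::int64_t delta = touchDownMs >= previousTouchUpMs
        ? touchDownMs - previousTouchUpMs
        : previousTouchUpMs - touchDownMs;
    return delta < maxIntervalMs;
}
```

For example, a touch-down at 1000 ms after a touch-up at 700 ms counts as a double tap, while a gap of exactly 500 ms does not.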

bool OSRPlugin::PluginInit()

{

std::vector<String> pluginList;

// Initialize Plug-in System Framework.

gPluginManager.Initialize();

// Obtain the plug-in list.

pluginList = gPluginManager.GetPluginList();

if (find(pluginList.begin(), pluginList.end(), pluginName) == pluginList.end()) {

LOGERROR("Cannot find plugin %s!", pluginName.c_str());

gPluginManager.Uninitialize();

return false;

}

plugin = gPluginManager.LoadPlugin(pluginName); // Load a plug-in.

if (plugin == nullptr) {

LOGERROR("No plugin loaded!");

gPluginManager.Uninitialize();

return false;

}

LOGINFO("Load Plugin %s successfully!", pluginName.c_str());

// Check whether the plug-in is activated.

if (!plugin->IsPluginActive()) {

LOGERROR("Plugin %s was not activated!", pluginName.c_str());

PluginDeInit();

return false;

}

return true;

}

bool OSRPlugin::ImageEnhancingSync(BufferDescriptor& inBuffer, BufferDescriptor& outBuffer)

{

Param paramIn, paramOut;

const float sharpness = 0.9f;

const bool toneMapping = true;

const int timeOut = 10000;

Param pi0;

// set opcode

pi0.Set<s32>(SYNC_IMAGE_ENHANCING);

// set val

Param pi1;

pi1.Set<void *>(static_cast<void *>(&inBuffer));

Param pi2;

pi2.Set<f32>(sharpness);

Param pi3;

pi3.Set<bool>(toneMapping);

Param pi4;

pi4.Set<s32>(timeOut);

// set paramIn

paramIn.Set(0, pi0);

paramIn.Set(1, pi1);

paramIn.Set(2, pi2);

paramIn.Set(3, pi3);

paramIn.Set(4, pi4);

Param po0;

// set val

po0.Set<void *>(static_cast<void *>(&outBuffer));

// set paramOut

paramOut.Set(0, po0);

// call fn:SYNC_IMAGE_ENHANCING

// Call the plug-in execution API.

bool success = plugin->Execute(paramIn, paramOut);

if (success) {

LOGINFO("Test Sync ImageEnhancing success");

return true;

} else {

LOGERROR("Test Sync ImageEnhancing failed");

return false;

}

}

void OSRPlugin::PluginDeInit()

{

// Unload the plug-in.

gPluginManager.UnloadPlugin(pluginName);

// Deinitialize Plug-in System Framework.

gPluginManager.Uninitialize();

}
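`PluginInit` and `PluginDeInit` must be paired on every code path, including early returns. One way to guarantee the pairing is a scope guard. The sketch below shows the idea with a mock manager: `MockPluginManager` only stands in for `gPluginManager` so that the example compiles on its own, and `PluginSession` and the plug-in name `demo_plugin` are hypothetical.

```cpp
#include <cassert>
#include <string>
#include <utility>

// Stand-in for gPluginManager, used only to make this sketch compile.
struct MockPluginManager {
    bool initialized = false;
    bool loaded = false;
    void Initialize() { initialized = true; }
    bool LoadPlugin(const std::string &) { loaded = initialized; return loaded; }
    void UnloadPlugin(const std::string &) { loaded = false; }
    void Uninitialize() { initialized = false; }
};

// Hypothetical guard: initializes and loads in the constructor, then unloads
// and uninitializes in the destructor, in reverse order.
class PluginSession {
public:
    PluginSession(MockPluginManager &manager, std::string name)
        : m_manager(manager), m_name(std::move(name))
    {
        m_manager.Initialize();
        m_ok = m_manager.LoadPlugin(m_name);
    }
    ~PluginSession()
    {
        if (m_ok) {
            m_manager.UnloadPlugin(m_name);
        }
        m_manager.Uninitialize();
    }
    PluginSession(const PluginSession &) = delete;
    PluginSession &operator=(const PluginSession &) = delete;
    bool IsLoaded() const { return m_ok; }

private:
    MockPluginManager &m_manager;
    std::string m_name;
    bool m_ok = false;
};
```

A `PluginSession` created inside a block unloads the plug-in and uninitializes the manager when the block exits, regardless of how it exits.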

Well done. You have successfully completed this codelab and learned how to:

For more information, click the following links:

Related documents

Download the demo source code used in this codelab from the following address: