HUAWEI HiAI is an open artificial intelligence (AI) capability platform for smart devices, which adopts a "chip-device-cloud" architecture, opening up chip, app, and service capabilities for a fully intelligent ecosystem. It assists you in delivering a better smart app experience for users, by fully leveraging Huawei's powerful AI processing capabilities.

HiAI Foundation APIs constitute an AI computing library for mobile computing platforms, enabling you to efficiently build AI apps that run on mobile devices. You can run neural network models on mobile devices and call HiAI Foundation APIs to accelerate computing. Using the default system images of mobile devices, you can complete integration, development, and validation without installing the HiAI Foundation APIs separately. HiAI Foundation APIs are released as a unified binary file. They accelerate neural network computing by using the HiAI heterogeneous computing platform. Currently, these APIs can run only on a Kirin system on a chip (SoC).

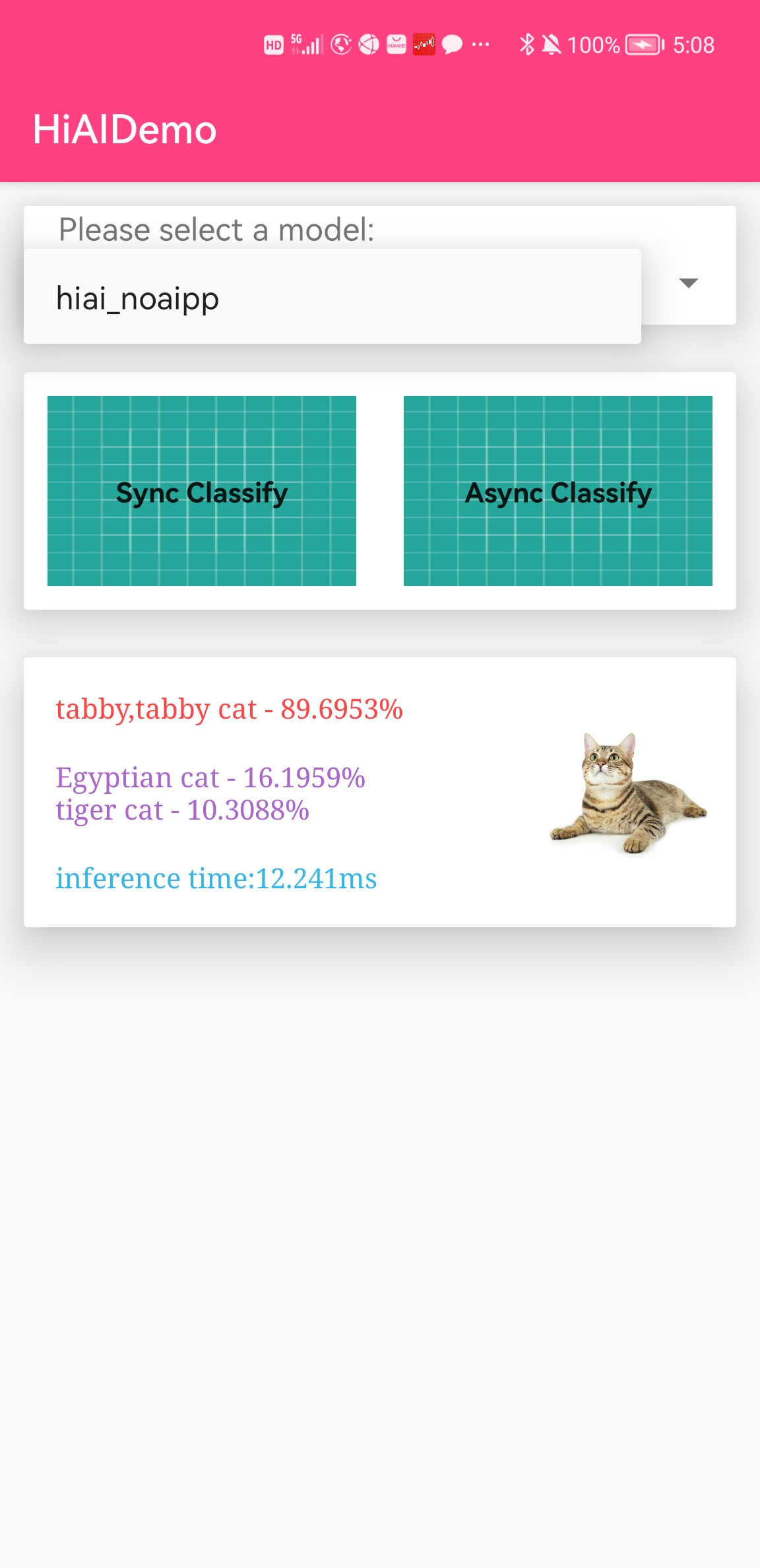

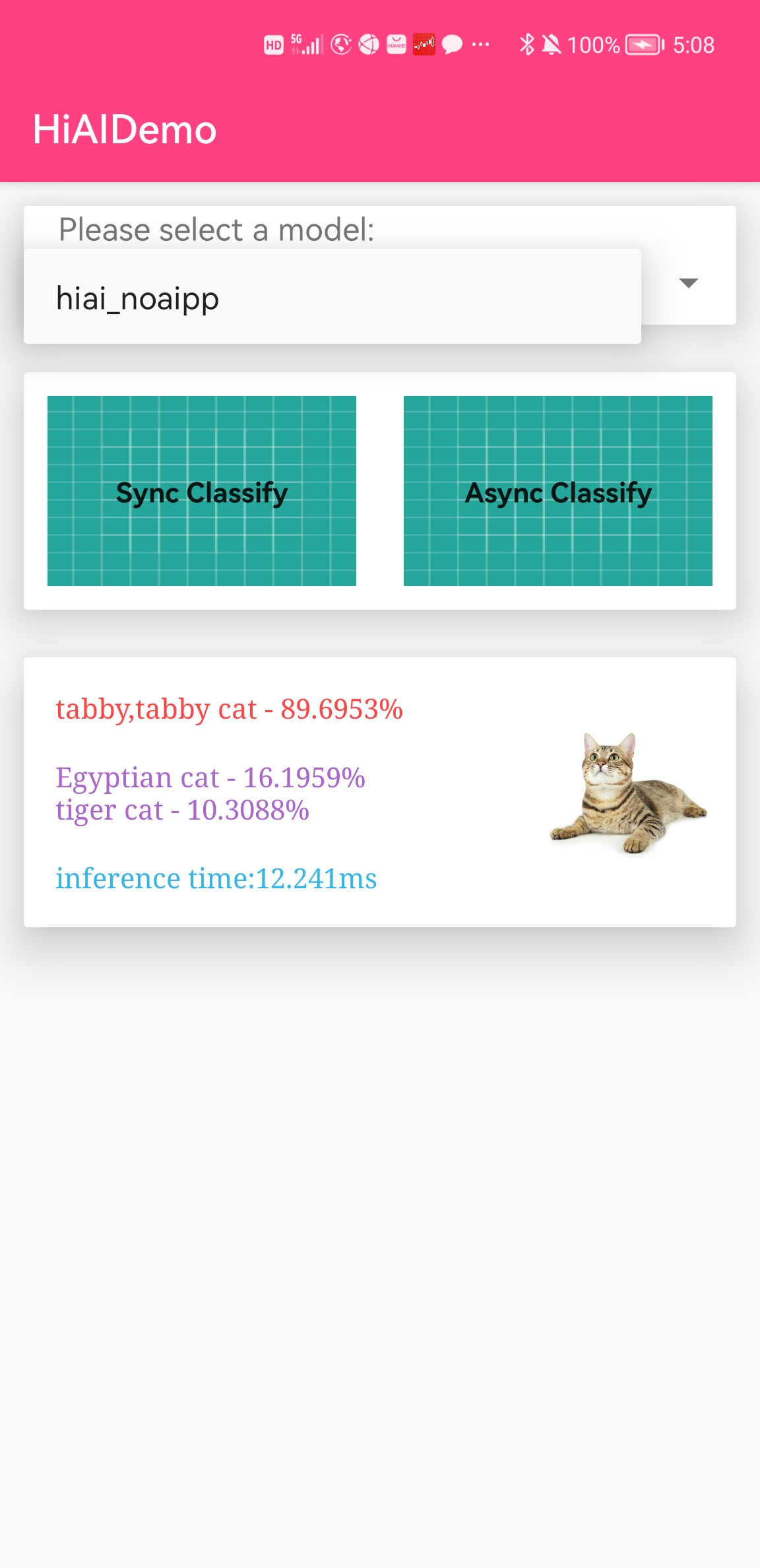

In this codelab, you can use the AI capabilities of HiAI Foundation to build a simple Android app that can classify and sort images. A demo app is as follows:

| Kirin Version | Kirin 810 | Kirin 990 |
| --- | --- | --- |
| Phone Model | Nova 5, Nova 5 Pro, Honor 20S, and Honor 9X Pro | Mate 30, Mate 30 Pro, Honor V30 Pro, P40, and P40 Pro |

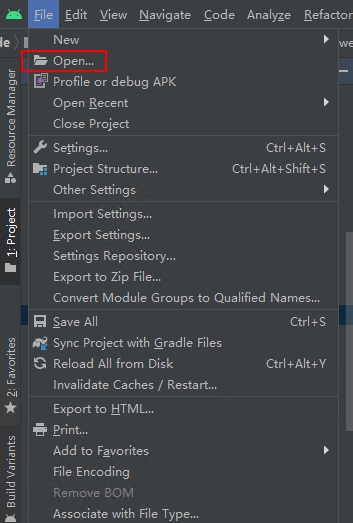

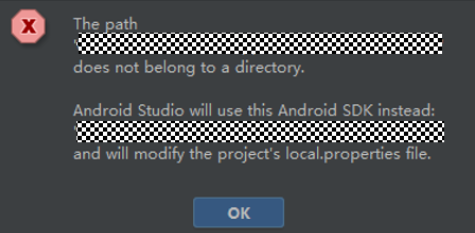

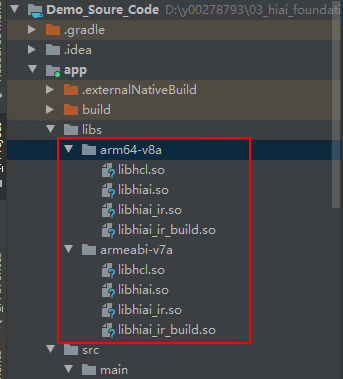

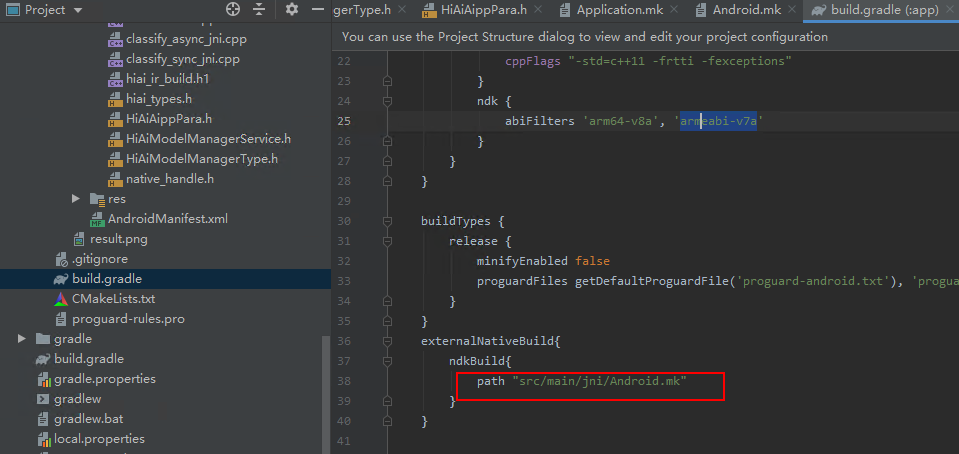

To integrate HiAI Foundation, prepare as follows:

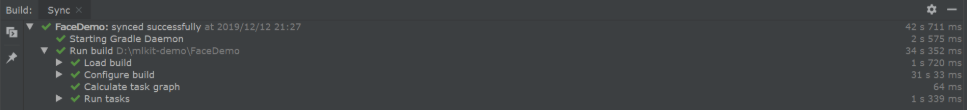

If the following information is displayed, the project is successfully synchronized.

If Unknown Device or No device is displayed, run the following two commands, one after the other, in the CMD window to restart the ADB service:

adb kill-server

adb start-server

LOCAL_PATH := $(call my-dir)

DDK_LIB_PATH := $(LOCAL_PATH)/../../../libs/$(TARGET_ARCH_ABI)

include $(CLEAR_VARS)

LOCAL_MODULE := hiai_ir

LOCAL_SRC_FILES := $(DDK_LIB_PATH)/libhiai_ir.so

include $(PREBUILT_SHARED_LIBRARY)

include $(CLEAR_VARS)

LOCAL_MODULE := hiai

LOCAL_SRC_FILES := $(DDK_LIB_PATH)/libhiai.so

include $(PREBUILT_SHARED_LIBRARY)

include $(CLEAR_VARS)

LOCAL_MODULE := hcl

LOCAL_SRC_FILES := $(DDK_LIB_PATH)/libhcl.so

include $(PREBUILT_SHARED_LIBRARY)

include $(CLEAR_VARS)

LOCAL_MODULE := hiai_ir_build

LOCAL_SRC_FILES := $(DDK_LIB_PATH)/libhiai_ir_build.so

include $(PREBUILT_SHARED_LIBRARY)

include $(CLEAR_VARS)

LOCAL_MODULE := hiaijni

LOCAL_SRC_FILES := \

classify_sync_jni.cpp \

classify_async_jni.cpp \

buildmodel.cpp

LOCAL_SHARED_LIBRARIES := hiai_ir \

hiai \

hcl \

hiai_ir_build

LOCAL_LDFLAGS := -L$(DDK_LIB_PATH)

LOCAL_LDLIBS += \

-llog \

-landroid

CPPFLAGS=-stdlib=libstdc++ LDLIBS=-lstdc++

LOCAL_CFLAGS += -std=c++14

include $(BUILD_SHARED_LIBRARY)

APP_ABI := arm64-v8a armeabi-v7a

APP_STL := c++_shared

Copy the offline model and label file (.om and labels_caffe.txt files in src/main/assets/ of the Android source code directory provided by the DDK) to the /src/main/assets directory of your project. (These files have already been copied to the target directory in the demo, so you can skip this step.)

HiAI Foundation provides a model inference capability that you can integrate into your app. Using it involves both model pre-processing and model inference. The DDK provides synchronous and asynchronous APIs. The following uses the synchronous APIs as an example to demonstrate the whole process of using the open AI capabilities of HiAI Foundation. Files used in the development:

protected void initModels()

{

File dir = getDir("models", Context.MODE_PRIVATE);

String path = dir.getAbsolutePath() + File.separator;

ModelInfo model_2 = new ModelInfo();

model_2.setModelSaveDir(path);

model_2.setUseAIPP(false);

model_2.setOfflineModel("hiai_noaipp.om");

model_2.setOfflineModelName("hiai_noaipp");

model_2.setOnlineModelLabel("labels_caffe.txt");

demoModelList.add(model_2);

}

Create and initialize a model manager instance.

classify_sync_jni.cpp

shared_ptr<AiModelMngerClient> LoadModelSync(vector<string> names, vector<string> modelPaths, vector<bool> Aipps)

{

/*Create a model manager instance.*/

shared_ptr<AiModelMngerClient> clientSync = make_shared<AiModelMngerClient>();

IF_BOOL_EXEC(clientSync == nullptr, LOGE("[HIAI_DEMO_SYNC] Model Manager Client make_shared error.");

return nullptr);

/*Initialize the model manager.*/

int ret = clientSync->Init(nullptr);

IF_BOOL_EXEC(ret != SUCCESS, LOGE("[HIAI_DEMO_SYNC] Model Manager Init Failed."); return nullptr);

......

return clientSync;

}
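The native snippets in this codelab rely on an IF_BOOL_EXEC macro whose definition is not shown. The following is a minimal sketch of how such a macro might be defined. It is an assumption for illustration, not the DDK's actual definition. LOGE is redefined here as a stand-in for the demo's logging macro, and InitOrFail is a hypothetical helper.

```cpp
#include <cstdio>

// Stand-in for the demo's Android logging macro (assumption).
#define LOGE(...) std::fprintf(stderr, __VA_ARGS__)

// Assumed definition: run exec_expr only when expr evaluates to true.
// The do { } while (0) wrapper keeps the macro safe inside unbraced if/else.
#define IF_BOOL_EXEC(expr, exec_expr) \
    do {                              \
        if (expr) {                   \
            exec_expr;                \
        }                             \
    } while (0)

// Hypothetical helper: returns -1 when the init step reports an error, else 0.
int InitOrFail(int initResult)
{
    IF_BOOL_EXEC(initResult != 0, LOGE("init failed, ret=%d\n", initResult); return -1);
    return 0;
}
```

Because exec_expr is pasted verbatim into the if body, it can hold several statements separated by semicolons, which is how the snippets above log an error and return in a single macro call.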

int LoadSync(vector<string>& names, vector<string>& modelPaths, shared_ptr<AiModelMngerClient>& client)

{

int ret;

vector<shared_ptr<AiModelDescription>> modelDescs;

vector<MemBuffer*> memBuffers;

/*Create an AiModelBuilder object.*/

shared_ptr<AiModelBuilder> modelBuilder = make_shared<AiModelBuilder>(client);

if (modelBuilder == nullptr) {

LOGE("[HIAI_DEMO_SYNC] create modelBuilder failed.");

return FAILED;

}

for (size_t i = 0; i < modelPaths.size(); ++i) {

string modelPath = modelPaths[i];

string modelName = names[i];

g_syncNameToIndex[modelName] = i;

// We can achieve the optimization by loading model from OM file.

LOGI("[HIAI_DEMO_SYNC] modelpath is %s\n.", modelPath.c_str());

/*Create a MemBuffer object, and pass the offline model path using the InputMemBufferCreate function of the builder object.*/

MemBuffer* buffer = modelBuilder->InputMemBufferCreate(modelPath);

if (buffer == nullptr) {

LOGE("[HIAI_DEMO_SYNC] cannot find the model file.");

return FAILED;

}

memBuffers.push_back(buffer);

string modelNameFull = string(modelName) + string(".om");

shared_ptr<AiModelDescription> desc =

/*Set the model name, execution mode, and the device on which the execution will be performed.*/

make_shared<AiModelDescription>(modelNameFull, AiModelDescription_Frequency_HIGH, HIAI_FRAMEWORK_NONE,

HIAI_MODELTYPE_ONLINE, AiModelDescription_DeviceType_NPU);

if (desc == nullptr) {

LOGE("[HIAI_DEMO_SYNC] LoadModelSync: desc make_shared error.");

ResourceDestroy(modelBuilder, memBuffers);

return FAILED;

}

/*Set the model buffer using the SetModelBuffer function of the AiModelDescription object.*/

desc->SetModelBuffer(buffer->GetMemBufferData(), buffer->GetMemBufferSize());

LOGI("[HIAI_DEMO_SYNC] loadModel %s IO Tensor.", desc->GetName().c_str());

modelDescs.push_back(desc);

}

/*Load the model using the model manager.*/

ret = client->Load(modelDescs);

ResourceDestroy(modelBuilder, memBuffers);

if (ret != 0) {

LOGE("[HIAI_DEMO_SYNC] Model Load Failed.");

return FAILED;

}

return SUCCESS;

}
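LoadSync calls ResourceDestroy by hand before most of its returns, but the early return after a failed InputMemBufferCreate call skips it. One way to make cleanup run on every exit path is a scope guard. The sketch below shows the idea only and is not DDK code: ScopeGuard and LoadWithGuard are hypothetical names, the integer vector stands in for memBuffers, and the counter stands in for the work done by ResourceDestroy.

```cpp
#include <functional>
#include <utility>
#include <vector>

// Runs a cleanup callback when the guard goes out of scope, on every return path.
class ScopeGuard {
public:
    explicit ScopeGuard(std::function<void()> cleanup) : cleanup_(std::move(cleanup)) {}
    ~ScopeGuard()
    {
        if (cleanup_) {
            cleanup_();
        }
    }
    ScopeGuard(const ScopeGuard&) = delete;
    ScopeGuard& operator=(const ScopeGuard&) = delete;

private:
    std::function<void()> cleanup_;
};

// Hypothetical loader: a negative entry simulates a buffer that failed to load.
// destroyCalls counts how often the stand-in cleanup ran.
int LoadWithGuard(const std::vector<int>& buffers, int& destroyCalls)
{
    ScopeGuard guard([&destroyCalls]() { ++destroyCalls; });
    for (int b : buffers) {
        if (b < 0) {
            return 1;  // early failure: the guard still runs the cleanup
        }
    }
    return 0;  // success: the guard runs the cleanup here as well
}
```

In the real function, the lambda would capture modelBuilder and memBuffers by reference and call ResourceDestroy, so no return statement needs its own cleanup call.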

shared_ptr<AiModelMngerClient> LoadModelSync(vector<string> names, vector<string> modelPaths, vector<bool> Aipps)

{

......

inputDimension.clear();

outputDimension.clear();

for (size_t i = 0; i < names.size(); ++i) {

string modelName = names[i];

bool isUseAipp = Aipps[i];

LOGI("[HIAI_DEMO_SYNC] Get model %s IO Tensor. Use AIPP %d", modelName.c_str(), isUseAipp);

vector<TensorDimension> inputDims, outputDims;

/*Obtain the dimension of the input and output from the OM model.*/

ret = clientSync->GetModelIOTensorDim(string(modelName) + string(".om"), inputDims, outputDims);

IF_BOOL_EXEC(ret != SUCCESS, LOGE("[HIAI_DEMO_SYNC] Get Model IO Tensor Dimension failed,ret is %d.", ret); return nullptr);

IF_BOOL_EXEC(inputDims.size() == 0, LOGE("[HIAI_DEMO_SYNC] inputDims.size() == 0"); return nullptr);

inputDimension.push_back(inputDims);

outputDimension.push_back(outputDims);

IF_BOOL_EXEC(UpdateSyncInputTensorVec(inputDims, isUseAipp, modelName) != SUCCESS, return nullptr);

IF_BOOL_EXEC(UpdateSyncOutputTensorVec(outputDims, modelName) != SUCCESS, return nullptr);

}

return clientSync;

}

int UpdateSyncInputTensorVec(vector<TensorDimension>& inputDims, bool isUseAipp, string& modelName)

{

input_tensor.clear();

vector<shared_ptr<AiTensor>> inputTensors;

int ret = FAILED;

for (auto inDim : inputDims) {

shared_ptr<AiTensor> input = make_shared<AiTensor>();

if (isUseAipp) {

ret = input->Init(inDim.GetNumber(), inDim.GetHeight(), inDim.GetWidth(), AiTensorImage_YUV420SP_U8);

LOGI("[HIAI_DEMO_SYNC] model %s uses AIPP(input).", modelName.c_str());

} else {

/*Create an input tensor and initialize the memory size.*/

ret = input->Init(&inDim);

LOGI("[HIAI_DEMO_SYNC] model %s does not use AIPP(input).", modelName.c_str());

}

IF_BOOL_EXEC(ret != SUCCESS, LOGE("[HIAI_DEMO_SYNC] model %s AiTensor Init failed(input).", modelName.c_str());

return FAILED);

inputTensors.push_back(input);

}

input_tensor.push_back(inputTensors);

IF_BOOL_EXEC(input_tensor.size() == 0, LOGE("[HIAI_DEMO_SYNC] input_tensor.size() == 0"); return FAILED);

return SUCCESS;

}

int UpdateSyncOutputTensorVec(vector<TensorDimension>& outputDims, string& modelName)

{

output_tensor.clear();

vector<shared_ptr<AiTensor>> outputTensors;

int ret = FAILED;

for (auto outDim : outputDims) {

shared_ptr<AiTensor> output = make_shared<AiTensor>();

/*Create an output tensor and initialize the memory size.*/

ret = output->Init(&outDim);

IF_BOOL_EXEC(ret != SUCCESS, LOGE("[HIAI_DEMO_SYNC] model %s AiTensor Init failed(output).", modelName.c_str());

return FAILED);

outputTensors.push_back(output);

}

output_tensor.push_back(outputTensors);

IF_BOOL_EXEC(output_tensor.size() == 0, LOGE("[HIAI_DEMO_SYNC] output_tensor.size() == 0"); return FAILED);

return SUCCESS;

}
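The two Init variants above allocate buffers of different sizes, and the inference step later rejects any input whose byte length differs from the tensor size. The sketch below estimates both sizes under stated assumptions: the non-AIPP tensor is taken to be a dense NCHW tensor of 32-bit floats, and the AIPP input is taken to be 8-bit YUV420SP with even height and width. Dim4 is a hypothetical plain struct standing in for TensorDimension.

```cpp
#include <cstddef>
#include <cstdint>

// Hypothetical stand-in for the DDK's TensorDimension.
struct Dim4 {
    std::uint32_t n;
    std::uint32_t c;
    std::uint32_t h;
    std::uint32_t w;
};

// Bytes for a dense NCHW tensor of 32-bit floats (assumed element type).
std::size_t FloatTensorBytes(const Dim4& d)
{
    return static_cast<std::size_t>(d.n) * d.c * d.h * d.w * sizeof(float);
}

// Bytes for 8-bit YUV420SP images: a full-resolution Y plane plus an
// interleaved UV plane at quarter resolution, i.e. 1.5 bytes per pixel.
std::size_t Yuv420spBytes(const Dim4& d)
{
    return static_cast<std::size_t>(d.n) * d.h * d.w * 3 / 2;
}
```

For a hypothetical 1 x 3 x 224 x 224 input, this gives 602112 bytes for the float tensor and 75264 bytes for the YUV420SP image, so the Java side has to supply a byte array of the matching length for the chosen mode.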

int runProcess(JNIEnv* env, jobject bufList, jmethodID listGet, int vecIndex, int listLength, const char* modelName)

{

for (int i = 0; i < listLength; i++) {

jbyteArray buf_ = (jbyteArray)(env->CallObjectMethod(bufList, listGet, i));

jbyte* dataBuff = nullptr;

int dataBuffSize = 0;

dataBuff = env->GetByteArrayElements(buf_, nullptr);

dataBuffSize = env->GetArrayLength(buf_);

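IF_BOOL_EXEC(input_tensor[vecIndex][i]->GetSize() != dataBuffSize,

LOGE("[HIAI_DEMO_SYNC] input->GetSize(%d) != dataBuffSize(%d) ",

input_tensor[vecIndex][i]->GetSize(), dataBuffSize);

/*Release the Java array without copying back before the early return.*/

env->ReleaseByteArrayElements(buf_, dataBuff, JNI_ABORT);

return FAILED);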

/*Fill the input tensor with the input buffer data.*/

memmove(input_tensor[vecIndex][i]->GetBuffer(), dataBuff, (size_t)dataBuffSize);

env->ReleaseByteArrayElements(buf_, dataBuff, 0);

}

AiContext context;

string key = "model_name";

string value = modelName;

value += ".om";

context.AddPara(key, value);

LOGI("[HIAI_DEMO_SYNC] runModel modelname:%s", modelName);

// before process

struct timeval tpstart, tpend;

gettimeofday(&tpstart, nullptr);

int istamp;

/*Call the Process API of the model manager to perform model inference and obtain output_tensor that stores the inference data.*/

int ret = g_clientSync->Process(context, input_tensor[vecIndex], output_tensor[vecIndex], 1000, istamp);

IF_BOOL_EXEC(ret, LOGE("[HIAI_DEMO_SYNC] Runmodel Failed!, ret=%d\n", ret); return FAILED);

// after process

gettimeofday(&tpend, nullptr);

float time_use = 1000000 * (tpend.tv_sec - tpstart.tv_sec) + tpend.tv_usec - tpstart.tv_usec;

time_use_sync = time_use / 1000;

LOGI("[HIAI_DEMO_SYNC] inference time %f ms.\n", time_use / 1000);

return SUCCESS;

}
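runProcess measures inference time with gettimeofday, which reads the wall clock and can be skewed if the system time changes during the call. As an alternative sketch, not taken from the demo, the same measurement can be written with std::chrono::steady_clock. ElapsedMs is a hypothetical helper name.

```cpp
#include <chrono>
#include <functional>

// Returns the duration of work() in milliseconds, measured with a monotonic
// clock so that system time adjustments cannot skew the result.
double ElapsedMs(const std::function<void()>& work)
{
    const auto start = std::chrono::steady_clock::now();
    work();
    const auto end = std::chrono::steady_clock::now();
    return std::chrono::duration<double, std::milli>(end - start).count();
}
```

In the demo, the callable would wrap the Process call, and the returned value would take the place of time_use / 1000.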

After the model inference is complete, the output data is available in output_tensor. You can then post-process it as required.

protected void runModel(ModelInfo modelInfo, ArrayList<byte[]> inputData) {

/*Perform inference.*/

outputDataList = ModelManager.runModelSync(modelInfo, inputData);

if (outputDataList == null) {

Log.e(TAG, "Sync runModel outputdata is null");

return;

}

inferenceTime = ModelManager.GetTimeUseSync();

for(float[] outputData : outputDataList){

Log.i(TAG, "runModel outputdata length : " + outputData.length);

/*Process the output after the inference is complete.*/

postProcess(outputData);

}

}
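The body of postProcess is not shown here. For an image classification model, a common post-processing step is to pick the highest-scoring classes and pair them with their labels. The sketch below shows that idea in C++, to match the native code above. TopK is a hypothetical helper, and the sketch assumes that each output value is a score whose index matches a line in the label file.

```cpp
#include <algorithm>
#include <cstddef>
#include <numeric>
#include <string>
#include <utility>
#include <vector>

// Returns up to k (label, score) pairs sorted by descending score.
// Assumes scores[i] belongs to labels[i]; surplus entries on either side are ignored.
std::vector<std::pair<std::string, float>> TopK(const std::vector<float>& scores,
                                                const std::vector<std::string>& labels,
                                                std::size_t k)
{
    const std::size_t n = std::min(scores.size(), labels.size());
    k = std::min(k, n);

    // Sort indices rather than scores so each score stays tied to its label.
    std::vector<std::size_t> order(n);
    std::iota(order.begin(), order.end(), 0);
    std::partial_sort(order.begin(), order.begin() + static_cast<std::ptrdiff_t>(k), order.end(),
                      [&scores](std::size_t a, std::size_t b) { return scores[a] > scores[b]; });

    std::vector<std::pair<std::string, float>> result;
    result.reserve(k);
    for (std::size_t i = 0; i < k; ++i) {
        result.emplace_back(labels[order[i]], scores[order[i]]);
    }
    return result;
}
```

std::partial_sort orders only the first k indices, which is enough when only a few top classes are displayed.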

Output:

Well done. You have successfully completed this codelab and learned how to:
